Having produced several reduced images of a target object in a given colour, as in the previous two recipes, the next step is often to combine them into a single ‘master’ image of the object in that colour. Such a combined image has an effective exposure time equal to the sum of the exposure times of the constituent images; hence it has an improved signal-to-noise ratio and fainter features become visible. Indeed, this improvement in signal-to-noise ratio over the individual images is the principal reason for combining them. There are two main reasons for taking several short exposures and then combining them, rather than taking one long exposure: firstly, to avoid saturating the CCD and, secondly, to allow cosmic-ray hits to be detected and removed. Cosmic-ray hits occur randomly over the frame and will in general fall in different places in different frames. Therefore, by combining two images and looking for pixels where the signal differs greatly, it is possible to locate and remove the hits.
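The idea of locating cosmic-ray hits by comparing frames can be sketched in a few lines of Python. This is only an illustration, not the algorithm used by CCDPACK: it assumes two frames that are already aligned and have the same exposure time, and the function names and the threshold value are hypothetical choices made for the example.

```python
def flag_cosmic_rays(frame_a, frame_b, threshold):
    """Return (row, col, frame) for pixels where one frame greatly exceeds the other.

    Assumes both frames are aligned, equally exposed 2-D lists of pixel values.
    """
    hits = []
    for r, (row_a, row_b) in enumerate(zip(frame_a, frame_b)):
        for c, (a, b) in enumerate(zip(row_a, row_b)):
            diff = a - b
            if diff > threshold:
                hits.append((r, c, "a"))   # frame_a is anomalously bright here
            elif diff < -threshold:
                hits.append((r, c, "b"))   # frame_b is anomalously bright here
    return hits


def combine_rejecting_hits(frame_a, frame_b, threshold):
    """Average the two frames, keeping only the lower value where they disagree."""
    combined = []
    for row_a, row_b in zip(frame_a, frame_b):
        out_row = []
        for a, b in zip(row_a, row_b):
            if abs(a - b) > threshold:
                out_row.append(min(a, b))      # discard the suspected hit
            else:
                out_row.append((a + b) / 2.0)  # ordinary pixel: take the mean
        combined.append(out_row)
    return combined


# Two tiny made-up frames; the value 950.0 plays the role of a cosmic-ray hit.
frame_a = [[100.0, 102.0, 98.0], [101.0, 950.0, 99.0]]
frame_b = [[101.0, 100.0, 99.0], [100.0, 103.0, 100.0]]

hits = flag_cosmic_rays(frame_a, frame_b, threshold=50.0)
combined = combine_rejecting_hits(frame_a, frame_b, threshold=50.0)
```

With only two frames the lower value is the sole available estimate at a flagged pixel; with more frames a median or clipped mean is a more robust choice.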

In addition to combining images of the same patch of sky, a related technique is to combine images of partly overlapping regions of sky in order to build up a mosaic² of a larger area. This method is quite important for CCD data because of the limited field of view of CCDs. Figure 8 is an example of an image built up in this way.

In general, separate images notionally centred on the same object will not line up perfectly because of pointing imperfections in the telescope. That is, corresponding pixels in two images will not be viewing exactly the same area of sky. Such misalignments are inevitable if images taken on different nights are being combined and will occur to a lesser extent even if consecutive images are being combined. In other circumstances the frames may be deliberately offset slightly (or jittered) in order to compensate for the CCD pixels under-sampling the image or to reduce the effect of flat-field errors. Before misaligned images can be combined they need to be lined up. Conceptually, they are aligned by using stars (and other objects) in the frames as fiducial marks.
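As a minimal sketch of using stars as fiducial marks, the following Python fragment estimates a pure x,y shift between two frames from the positions of stars seen in both. It assumes the stars have already been matched (the two lists give the same stars in the same order) and that the misalignment is a simple translation with no rotation or scale change. The function names and coordinates are invented for illustration and are not part of CCDPACK.

```python
from statistics import median


def estimate_offset(stars_ref, stars_other):
    """Estimate the (dx, dy) shift that maps stars_other onto stars_ref.

    Both arguments are lists of (x, y) pixel positions of the same stars,
    in the same order. The median makes the estimate robust to a few
    badly measured positions.
    """
    dxs = [xr - xo for (xr, _), (xo, _) in zip(stars_ref, stars_other)]
    dys = [yr - yo for (_, yr), (_, yo) in zip(stars_ref, stars_other)]
    return median(dxs), median(dys)


def apply_offset(positions, dx, dy):
    """Shift a list of (x, y) positions by (dx, dy)."""
    return [(x + dx, y + dy) for x, y in positions]


# Made-up star positions: the second frame is displaced by (-3, +2) pixels.
stars_ref = [(10.0, 20.0), (55.5, 40.25), (80.0, 12.5)]
stars_other = [(7.0, 22.0), (52.5, 42.25), (77.0, 14.5)]

dx, dy = estimate_offset(stars_ref, stars_other)
aligned = apply_offset(stars_other, dx, dy)
```

In practice the hard part is matching the stars between frames in the first place; the sketch deliberately skips that step.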

The displacement between the various images means that a region of sky which was imaged on a ‘bad’ pixel in one image may well have been imaged on a good pixel in the other images. Consequently the combined image may well contain significantly fewer ‘bad’ pixels than the individual images. However, it should be remembered that the amount of statistical noise present in any part of the combined image will be related to the total exposure time of the pixels which contributed to it. If part of the final image was visible on only one of the input images then it will have rather more noise associated with it than a part of the final image to which twenty input images contributed. This effect is often particularly noticeable around the edges of mosaics or combined images.
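The size of this effect can be estimated with a simple worked example. It assumes, purely for illustration, that every input image has the same exposure time, that the noise in each image is independent with the same standard deviation $\sigma$ per pixel, and that the contributing pixels are averaged. The symbols $N$ and $\sigma$ are introduced here and do not appear elsewhere in this recipe.

$$
\sigma_{N} = \frac{\sigma}{\sqrt{N}}
\qquad\Longrightarrow\qquad
\frac{\sigma_{1}}{\sigma_{20}} = \sqrt{20} \approx 4.5
$$

Under these assumptions, a region covered by only one input image is roughly four and a half times noisier than a region to which twenty images contributed.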

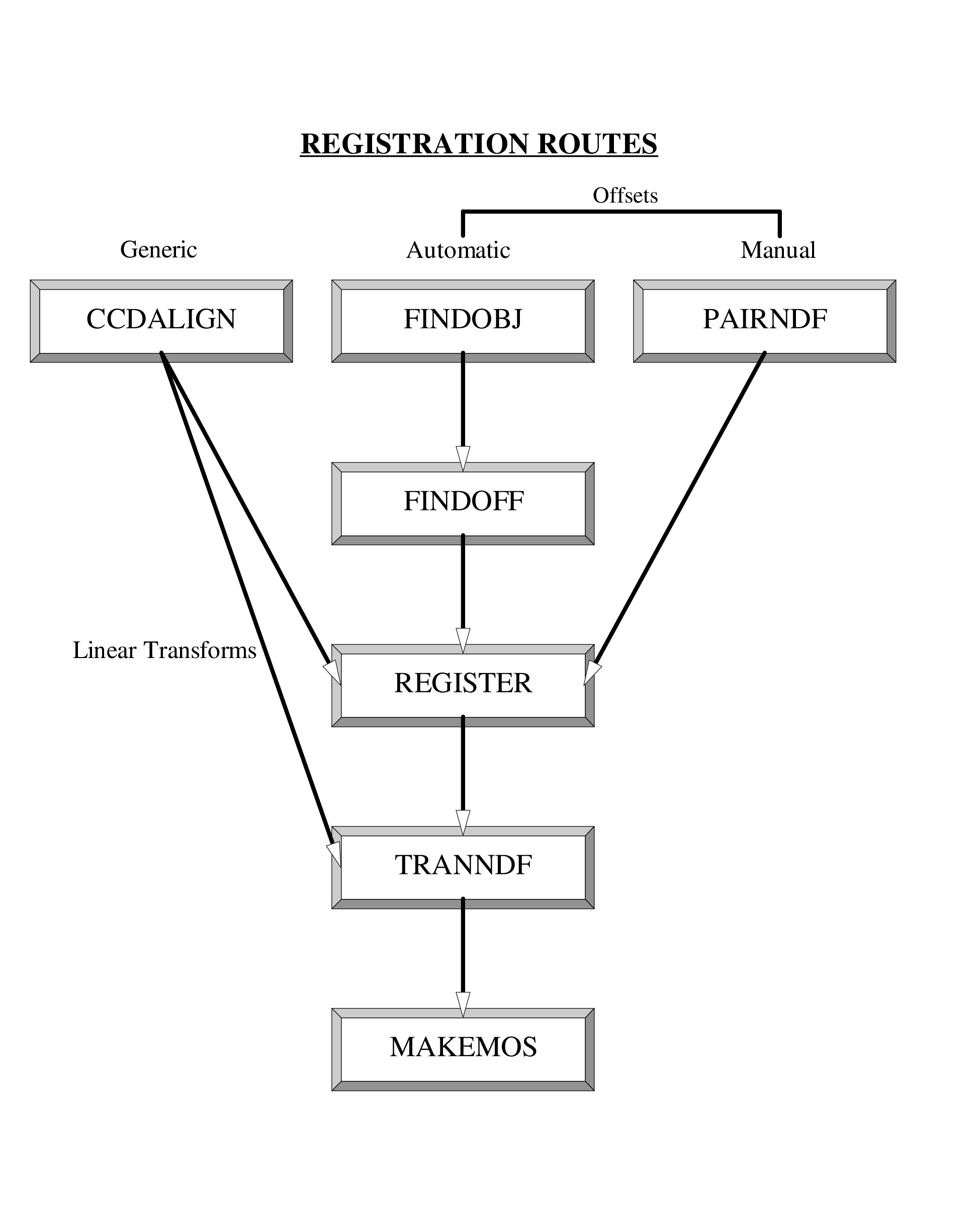

CCDPACK has various facilities for aligning (or registering) and combining images: they are illustrated in Figure 19. Which route is appropriate depends basically on how badly the images are misaligned and how crowded the fields being imaged are (that is, how many objects they contain). The usual techniques correct for the misalignment completely automatically. However, manual techniques are available as a fall-back for cases where the automatic ones fail.

In this recipe a simple x,y transformation is applied to align the two reduced images produced in the previous recipe. Consequently the following two files should be available:

Then proceed as follows.

The lists of objects found in each frame will be written to files in the same directory, with names derived from the corresponding input frame and file type ‘.find’. These files are simple text files and, if you wish, you can examine them with Unix commands such as more.

Two transformed, registered images are created, called:

This sequence of steps should work correctly with the example data. However, with other data findobj or findoff may sometimes fail because they find too few stars that are common to the two datasets. In this case the transformation must be defined manually using pairndf; see SUN/139 for further details.

All the images in subdirectory targets with names ending in ‘_reg’ are combined into a single image called mosaic.sdf. The scale and zero options ensure that makemos correctly handles images of different exposure time, air mass and atmospheric transparency. The application will run somewhat faster if scale and zero are omitted, but if they are given it should produce sensible output from almost any input images.

For (UKIRT) infrared data the scale option does not work well. As the frames being combined are usually nearly contemporaneous and have the same integration time, it is better to omit scale and just use the zero option.

By default makemos combines images using a method known as ‘median stacking’. This technique involves extracting all the input pixel values that contribute to an output image pixel and sorting them into rank order. The median value is computed from this sorted list and adopted as the value of the output pixel. This technique both suppresses image noise and removes cosmic-ray hits. Other methods, such as the ‘clipped mean’, are available. See SUN/139[10] for full details.
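The median-stacking idea described above can be sketched in plain Python as follows. This is an illustration of the technique only, not the makemos implementation: it assumes the input images are already registered and of equal size, and it uses None as a hypothetical marker for pixels with no valid value (for example, bad pixels or regions outside an image's coverage).

```python
from statistics import median


def median_stack(images):
    """Combine aligned, equally sized 2-D images by taking the per-pixel median.

    images is a list of 2-D lists; None marks a pixel with no valid value.
    An output pixel is None if no input image contributes to it.
    """
    n_rows = len(images[0])
    n_cols = len(images[0][0])
    stacked = []
    for r in range(n_rows):
        out_row = []
        for c in range(n_cols):
            # Collect every valid input value for this output pixel.
            values = [img[r][c] for img in images if img[r][c] is not None]
            # statistics.median sorts the values and picks the middle one
            # (or the mean of the two middle ones for an even count).
            out_row.append(median(values) if values else None)
        stacked.append(out_row)
    return stacked


# Three tiny made-up images; 500.0 stands in for a cosmic-ray hit and
# None for a bad pixel.
images = [
    [[10.0, 12.0], [11.0, 9.0]],
    [[11.0, 500.0], [10.0, None]],
    [[9.0, 13.0], [12.0, 10.0]],
]

stacked = median_stack(images)
```

The cosmic-ray value never reaches the output because it sits at the extreme of the sorted list, which is why the median suppresses such outliers.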

Because the combined image was created from just two input images, the improvement in signal-to-noise ratio over the individual images is modest.

² Note that the term ‘mosaic’ is used in two similar but different ways in connection with CCD instruments. One usage is to denote images of a region of sky larger than the CCD camera’s field of view which have been constructed by combining partly overlapping image frames, as introduced here. The other usage, introduced in Section 4.1, is to describe a CCD camera in which several abutting CCD chips are arranged in a grid in the focal plane. To complicate matters further, an instrument with a mosaic of CCD chips will often be used to observe a series of partly overlapping images which are subsequently combined to form a mosaic of a larger region of sky, not least because it is often necessary to partly overlap images from a mosaic of CCD chips in order to ‘fill in’ the regions surrounding the edges of the chips which cannot be observed.