3 ECHOMOP TASKS & ECHMENU OPTIONS

The ECHMENU monolith main menu options are described in the following text. Many of these options are also available as tasks which can be accessed from the shell. Where a task is available, its name is given in the section heading.

In each of the following sections a description is given of the task, its purpose, the parameters it uses and the reduction file objects it accesses. Parameters used by the tasks are described in detail under Parameters.

0:echhelp – HELP

Provides entry into the HELP library for browsing. Upon entry, the first topic displayed depends on the context and will usually be appropriate to the next default option at the time.

To leave HELP, type CTRL-Z or press carriage-return until the ECHMENU menu reappears.

The stand-alone HELP browser echhelp can be invoked from the shell, or the hypertext version of the text can be accessed using

1: ech_locate – Start a Reduction

This is usually the first option selected and will cause the following operations to be performed:

- 1.1 (ech_fcheck)

This step is enabled by setting the parameter TUNE_FCHECK=YES; if it is not set, no frame checking is performed.

The following checks are made:

- Checks frame dimensions. This simply checks that the trace and input frames have the same dimensions.

- Checks for bad rows or columns specified in the reduction database.

- Checks for unspecified bad rows or columns. The task checks along both the rows and the columns of the data frame, detecting runs of pixels of uniform intensity. These pixels are assumed to be ‘bad’ and are flagged as such.

- Checks for saturated pixels (threshold level set by ).

- Checks for BAD values in the input and trace frames.

The trace task will ignore any pixels flagged during frame checking. This can aid the tracing of frames containing saturated pixels, bad rows or columns, etc., which might otherwise upset the tracing process.
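The frame checks listed above can be illustrated with a short NumPy sketch. It is not ECHOMOP's implementation: the function name `flag_bad_pixels`, the `saturation` argument and the minimum run length `min_run` (how many identical consecutive pixels count as a ‘bad’ run) are hypothetical, and NaN is used to stand in for a BAD value.

```python
import numpy as np

def flag_bad_pixels(frame, saturation, min_run=5):
    """Boolean mask of pixels to be ignored by the tracer (sketch only).

    A pixel is flagged if it is non-finite (standing in for a BAD value),
    at or above the saturation level, or part of a run of at least
    `min_run` identical values along a row or a column. `min_run` is an
    assumed parameter, not an ECHOMOP one.
    """
    frame = np.asarray(frame, dtype=float)
    mask = ~np.isfinite(frame) | (frame >= saturation)

    def flag_runs(line):
        # Mark every run of identical consecutive values of length >= min_run.
        out = np.zeros(line.size, dtype=bool)
        start = 0
        for i in range(1, line.size + 1):
            if i == line.size or line[i] != line[start]:
                if i - start >= min_run:
                    out[start:i] = True
                start = i
        return out

    for r in range(frame.shape[0]):      # runs along rows
        mask[r, :] |= flag_runs(frame[r, :])
    for c in range(frame.shape[1]):      # runs along columns
        mask[:, c] |= flag_runs(frame[:, c])
    return mask
```

A tracer would then simply skip any pixel where the mask is True.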

- 1.2 (ech_decos1)

Optionally check the ‘trace’ frame for cosmic rays. This option is normally switched off; it is enabled by setting the parameter TUNE_CRTRC=YES. The approach is to apply two median filters to the data frame: one in the X-direction (along rows) and the other in the Y-direction (along columns). The original image is then divided by each of these median images, and the result is histogrammed. You then select a threshold point on the displayed histogram. All pixels generating samples above the clip threshold are flagged as cosmic rays. This method does not rely on the échelle nature of the image and may be used with some success on data frames of non-spectral type.
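The median-ratio idea above can be sketched with NumPy as follows. The text does not say how the two ratio images are combined before histogramming; this sketch assumes the smaller of the two ratios is used, because a cosmic ray is sharp in both directions whereas an order is only sharp across the slit. The function names, the window `width` and the fixed `threshold` (standing in for the value you would pick from the histogram) are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def running_median(frame, width, axis):
    """Median over an odd-length window along one axis (edges padded by replication)."""
    half = width // 2
    pad = [(0, 0), (0, 0)]
    pad[axis] = (half, half)
    windows = sliding_window_view(np.pad(frame, pad, mode="edge"), width, axis=axis)
    return np.median(windows, axis=-1)

def cosmic_ray_ratio(frame, width=5):
    """Pixel value divided by its X- and Y-direction running medians.

    Assumption of this sketch: keep the smaller of the two ratios.
    """
    frame = np.asarray(frame, dtype=float)
    with np.errstate(divide="ignore", invalid="ignore"):
        ratio_x = frame / running_median(frame, width, axis=1)  # along rows
        ratio_y = frame / running_median(frame, width, axis=0)  # along columns
    return np.minimum(ratio_x, ratio_y)

def flag_cosmic_rays(frame, threshold, width=5):
    """Flag pixels whose ratio exceeds the threshold chosen from the histogram."""
    ratio = cosmic_ray_ratio(frame, width)
    with np.errstate(invalid="ignore"):
        return ratio > threshold
```

In an interactive setting you would inspect `np.histogram(ratio[np.isfinite(ratio)], bins=100)` to choose `threshold`.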

- 1.3 (ech_slope)

Determine the approximate slope of the orders across the frame. A common problem when extracting échelle orders is that they are significantly sloped with respect to the pixel rows/columns. echomop can cope with highly sloping orders because it works by first locating a single point in each order and then using this as the start point for tracing the order path. echomop also calculates the approximate slope of the orders prior to tracing, and uses this information to predict the order position when tracing fails (usually due to contaminated pixels).

The estimate obtained by this method is, however, an average value over all orders. It is therefore not applicable when the orders are highly distorted, or when each order has a different slope.

The slope as calculated by the program may be overridden manually using the FIGARO SETOBJ command:

% setobj object=-rdctn-file-name-.MORE.ECHELLE.ORDER_SLOPE value=n.nnnn
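The text does not describe how ECHOMOP measures the average slope. Purely as an illustration of one plausible approach, the sketch below cross-correlates the averaged profiles of two columns a known distance apart and converts the best integer shift into a slope in pixels of Y per pixel of X. The function names, `dx` and `max_shift` are hypothetical, and a real implementation would also refine the shift to sub-pixel precision.

```python
import numpy as np

def column_profile(frame, x, half_width=2):
    """Average of a few adjacent columns centred on column x."""
    return frame[:, x - half_width : x + half_width + 1].mean(axis=1)

def best_shift(a, b, max_shift):
    """Integer shift s maximising sum_y a[y] * b[y + s] (mean-subtracted)."""
    a = a - a.mean()
    b = b - b.mean()
    n = a.size
    best, best_score = 0, -np.inf
    for s in range(-max_shift, max_shift + 1):
        lo, hi = max(0, -s), min(n, n - s)  # keep both y and y + s inside the array
        score = np.dot(a[lo:hi], b[lo + s : hi + s])
        if score > best_score:
            best, best_score = s, score
    return best

def estimate_order_slope(frame, dx, max_shift=10):
    """Average order slope (Y pixels per X pixel) from two columns 2*dx apart.

    Sketch only: not the method ECHOMOP is documented to use.
    """
    frame = np.asarray(frame, dtype=float)
    x0 = frame.shape[1] // 2
    shift = best_shift(column_profile(frame, x0 - dx),
                       column_profile(frame, x0 + dx), max_shift)
    return shift / (2.0 * dx)
```

With such an estimate, the position of an order whose centre is `y0` at `x0` can be predicted at any column as `y0 + slope * (x - x0)`, which is the kind of prediction the tracer falls back on when centring fails.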

- 1.4 (ech_count)

Count the orders and record their positions in Y at the centre (X=NX/2) of the frame. The orders can usually be located completely automatically by ECHOMOP. The algorithm takes the central few columns of the trace frame and cross-correlates them to provide an estimate of the average order separation. It then uses this estimate to size a sampling box. The central columns are then re-sampled using averaging and a triangle filter to enhance the central peaks. The resulting data are then searched to find the peaks representing the centre of each order. This technique can be severely affected by cosmic rays and other high-energy contaminants in the image. It is recommended that only flat-field (or bright object) frames are used to perform order location and tracing.
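The automatic location step can be sketched as follows. This is an illustration under stated assumptions, not ECHOMOP's code: it takes the first positive peak of the autocorrelation of the central profile as the order separation, uses a triangle filter whose half-width is a quarter of that separation, and accepts local maxima lying more than halfway between the median and the maximum of the filtered profile. The function names and these constants are hypothetical.

```python
import numpy as np

def order_separation(profile, min_lag=3):
    """Average order spacing: lag of the first positive autocorrelation peak."""
    p = profile - profile.mean()
    ac = np.correlate(p, p, mode="full")[p.size - 1:]  # lags 0 .. n-1
    for lag in range(min_lag, ac.size - 1):
        if ac[lag] > 0 and ac[lag] >= ac[lag - 1] and ac[lag] > ac[lag + 1]:
            return lag
    raise ValueError("no periodic order pattern found")

def triangle_filter(profile, half_width):
    """Convolve with a normalised triangular kernel of length 2*half_width + 1."""
    w = np.concatenate([np.arange(1, half_width + 2), np.arange(half_width, 0, -1)])
    return np.convolve(profile, w / w.sum(), mode="same")

def locate_orders(frame, n_cols=5):
    """Return (separation, Y positions of order centres) at X = NX/2 (sketch only)."""
    frame = np.asarray(frame, dtype=float)
    x0 = frame.shape[1] // 2
    profile = frame[:, x0 - n_cols // 2 : x0 + n_cols // 2 + 1].mean(axis=1)
    sep = order_separation(profile)
    smooth = triangle_filter(profile, max(1, sep // 4))  # enhance the central peaks
    base = np.median(smooth)
    level = base + 0.5 * (smooth.max() - base)            # assumed acceptance level
    peaks = [y for y in range(1, smooth.size - 1)
             if smooth[y] > level
             and smooth[y] > smooth[y - 1] and smooth[y] >= smooth[y + 1]]
    return sep, peaks
```

A single cosmic ray in the central columns would produce an extra peak here, which is why a flat-field or bright object frame is recommended for this step.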

In cases where the algorithm cannot locate all the orders that are apparent on visual inspection, you may help to locate them manually. This is controlled by the parameter TUNE_AUTLOC. With TUNE_AUTLOC=NO (the default), echomop displays a graph of the central portion of the frame and waits for interactive verification of, or correction to, the located order positions. If you are confident that the located orders will be correct, TUNE_AUTLOC=YES can be specified.

In many cases the location of the orders (at the frame centre) can be completed automatically. Cosmic-ray cleaning of the trace frame can be enabled using TUNE_CRTRC=YES, and bad row/column checking using TUNE_FCHECK=YES. The located order positions are plotted on the graphics device (specified using the SOFT parameter) and may be edited using the graphics cursor.

When TUNE_AUTLOC=NO you will be presented with the following menu options:

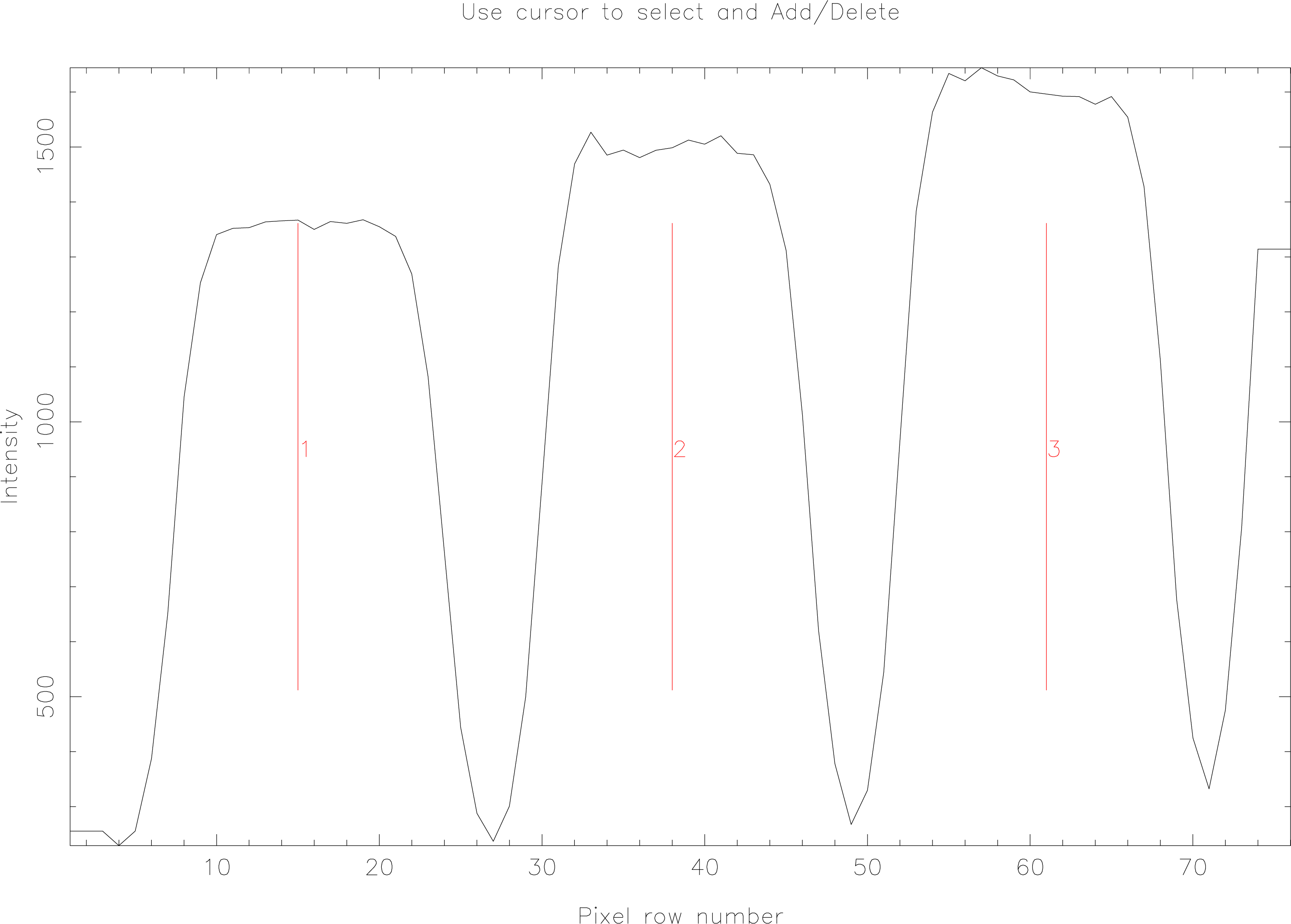

The Figure above shows an example of the order-location plot with 3 located orders.

- A(dd an order): Marks the current cursor position as your guess at an order centre.

- D(elete an order): Deletes the order nearest to the cursor position.

- C(lear all orders): Deletes all the current order selections.

- R(eplot the display): Replots the graph. The displayed orders are renumbered from left to right.

- E(xit): Exits the order-location editor.

- M(enu): Displays the full menu of options. Normally a one-line menu summarising the options is displayed.

Parameters:

Reduction File Objects:

- NO_OF_ORDERS - type: _INTEGER, access: READ/WRITE.

- NREF_FRAME - type: _INTEGER, access: READ/WRITE.

- NX_PIXELS - type: _INTEGER, access: READ/WRITE.

- NY_PIXELS - type: _INTEGER, access: READ/WRITE.

- ORDER_SLOPE - type: _REAL, access: READ/WRITE.

- ORDER_YPOS - type: _INTEGER, access: READ/WRITE.

- TRACE_WIDTH - type: _INTEGER, access: READ/WRITE.

2:ech_trace – Trace the Orders

The tracing of the paths of the orders across the data frame is often a source of difficulty, as it is fairly easy for blemishes in the frame to fatally deflect order-tracing algorithms from the actual path of the order. echomop provides a variety of options to help combat these problems. echomop order tracing first locates the positions of the orders at the centre of the frame and estimates the average order slope. It uses this information to predict the existence of any partial orders at the top/bottom of the frame which may have been missed by the examination of the central columns during order location.

Tracing then proceeds outwards from the centre of each order. At each step outwards a variable-size sampling box is used to gather a set of averages for the rows near the expected order centre. The centre of these data is then evaluated by one of the following methods:

- Gaussian: Attempts to fit a Gaussian profile across the data. Works well for bright object frames.

- Centroid: Calculates the centroid of the data. The most generally applicable method.

- Edge: Detects the upper and lower ‘edges’ of the data and interpolates. Less accurate, but works well for difficult flat fields (e.g., saturated ones).

- Balance: Calculates the centre of gravity (balance point). Works well for difficult data when the G(aussian) and C(entroid) methods are having problems.

- Re-trace: Uses a previous trace as a template to predict the trace whenever the data cannot be centred. This will normally be used in conjunction with automatic trace consistency checking to improve poorly traced orders.

- Triangle filter: In addition, a triangle filter can be applied during tracing to help enhance the order peaks and improve the trace. This is done by prefixing the selected trace specifier with a ‘T’; thus TC would use triangle-filtered centroids.

When it fails to find a good centre, the trace algorithm loops, automatically increasing the size of its sampling box. The sampling box can grow up to a size governed by the measured average order separation.
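Two of the centring methods listed above (Centroid and Edge) and the growing sampling box can be sketched in NumPy as follows. This is a minimal illustration, not ECHOMOP's code: the function names, the half-maximum edge level `frac`, the growth `step`, and the rule that a method signals failure by returning `None` (for Edge, when an edge is not inside the box) are all assumptions. In practice `max_half` would be derived from the measured order separation.

```python
import numpy as np

def centroid(profile):
    """Intensity-weighted mean position (in box coordinates), background removed."""
    w = profile - profile.min()
    total = w.sum()
    if total <= 0:
        return None
    return float(np.dot(w, np.arange(profile.size)) / total)

def edge_centre(profile, frac=0.5):
    """Midpoint of the lower and upper crossings of frac * peak (linear interpolation)."""
    p = profile - profile.min()
    level = frac * p.max()
    above = np.nonzero(p >= level)[0]
    lo, hi = above[0], above[-1]
    if lo == 0 or hi == p.size - 1:
        return None  # an edge is not inside the box
    y_lo = lo - 1 + (level - p[lo - 1]) / (p[lo] - p[lo - 1])
    y_hi = hi + (p[hi] - level) / (p[hi] - p[hi + 1])
    return float(0.5 * (y_lo + y_hi))

def centre_in_box(column, y_expected, half0, max_half, method, step=2):
    """Grow the sampling box around y_expected until `method` finds a centre."""
    half = half0
    yc = int(round(y_expected))
    while half <= max_half:
        lo = max(0, yc - half)
        hi = min(column.size, yc + half + 1)
        c = method(np.asarray(column[lo:hi], dtype=float))
        if c is not None:
            return lo + c  # convert back to frame coordinates
        half += step
    return None  # no centre found; a tracer would fall back on the slope prediction
```

For example, `centre_in_box(col, 30, 3, 15, edge_centre)` on an order actually centred at Y=40 fails for the small boxes and succeeds once the box contains both edges of the order.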

When a set of centres has been obtained for an order, a polynomial is fitted to their coordinates. The degree is selectable. For ideal data, these polynomials will accurately reflect the path of the order across the frame. For real data it is usually helpful to refine these polynomials by clipping the most deviant points and re-fitting. Options are provided to do this automatically or manually.

When dealing with distorted data it is often necessary to use a high-degree polynomial to fit the order traces accurately. This in turn can lead to problems at the edges of the frame, where the order is often faint.

Typically the polynomial will ‘run away’ from the required path. The simplest solution is of course to re-fit with a lower-degree polynomial; however, this may not be satisfactory if the high degree is necessary to obtain a good fit over the rest of the order.

In these circumstances, and others where the polynomials of one or more orders have ‘run away’, echomop provides an automatic consistency checker. The consistency-checking task works by fitting polynomials to order number and Y-coordinate at a selection of positions across the frame. The predicted order centres from both sets of polynomials are then compared with each other, and the mean and sigma of the differences are calculated. The ‘worst’ order is then corrected by re-calculating its trace polynomial using the remaining orders (excluding its own contribution). This process is repeated until the mean deviation between the polynomials falls below a tunable threshold value. The consistency checker will also cope with the ‘bad’ polynomials which can result when partial orders have been automatically fitted.
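The consistency check described above can be sketched with NumPy's polynomial module. This is an illustration of the idea, not ECHOMOP's implementation: the function name, the degrees `order_deg` and `trace_deg`, the use of each order's mean absolute deviation as its ‘badness’ (the sigma of the differences is not used here), and the `threshold` and `max_iter` values are all assumptions.

```python
import numpy as np
from numpy.polynomial import polynomial as P

def check_consistency(traces, xs, order_deg=2, trace_deg=3, threshold=0.2, max_iter=10):
    """Repair 'run-away' trace polynomials using the other orders (sketch only).

    traces: list of coefficient arrays (lowest power first), one y(x) per order.
    xs:     X positions at which the traces are compared.
    """
    traces = [np.asarray(c, dtype=float) for c in traces]
    n = np.arange(len(traces))
    for _ in range(max_iter):
        ys = np.array([P.polyval(xs, c) for c in traces])  # shape (n_orders, n_x)
        # At each X, fit Y as a smooth function of order number.
        pred = np.empty_like(ys)
        for j in range(xs.size):
            pred[:, j] = P.polyval(n, P.polyfit(n, ys[:, j], order_deg))
        dev = np.abs(ys - pred).mean(axis=1)  # mean deviation of each order
        if dev.mean() < threshold:
            break
        worst = int(np.argmax(dev))
        others = n != worst
        # Predict the worst order from the other orders only, then refit its trace.
        y_new = np.array([P.polyval(worst, P.polyfit(n[others], ys[others, j], order_deg))
                          for j in range(xs.size)])
        traces[worst] = P.polyfit(xs, y_new, trace_deg)
    return traces
```

Excluding the worst order from its own prediction matters: a badly run-away polynomial would otherwise pull the across-order fit towards itself and partly hide its own error.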

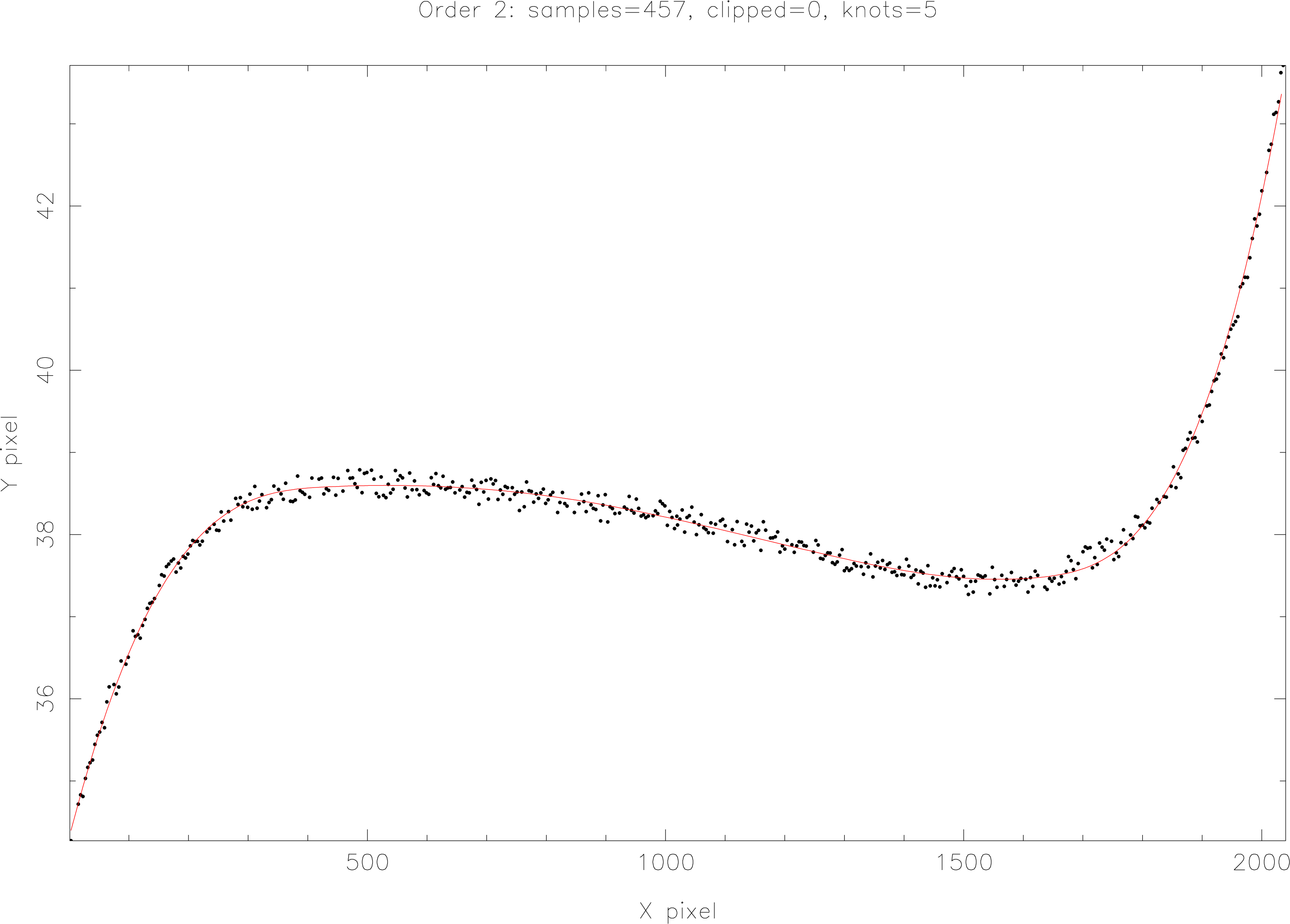

Viewing traced paths

The tracing of the échelle order paths is central to the entire extraction process, and care should be taken to obtain the best traces possible. echomop provides a large number of processing alternatives to help ensure this can be done. Most of these report information such as RMS deviations when run. In general, however, the best way of evaluating the success or failure of the tracing process is to examine visually the paths of the fitted trace polynomials. Three methods of viewing the traced paths are provided.

- Viewing the fitted paths overlaid on an image of the trace frame as tracing is done (set parameter DISPLAY=YES).

- Using a graphics device to plot the paths of all orders (task ech_trplt/ECHMENU Option 15).

For a single order, a more detailed examination of the relation of a trace polynomial to the points it fits can be obtained using the V(iew) command in the task ech_fitord/ECHMENU Option 3.

Parameters:

Reduction File Objects:

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- ORDER_SLOPE - type: _REAL, access: READ.

- ORDER_YPOS - type: _INTEGER, access: READ.

- TRACE - type: _REAL, access: READ/WRITE.

- TRACE_PATH - type: _REAL, access: READ/WRITE.

- TRACE_WIDTH - type: _INTEGER, access: READ.

- TRC_IN_DEV - type: _REAL, access: READ/WRITE.

- TRC_POLY - type: _DOUBLE, access: READ/WRITE.

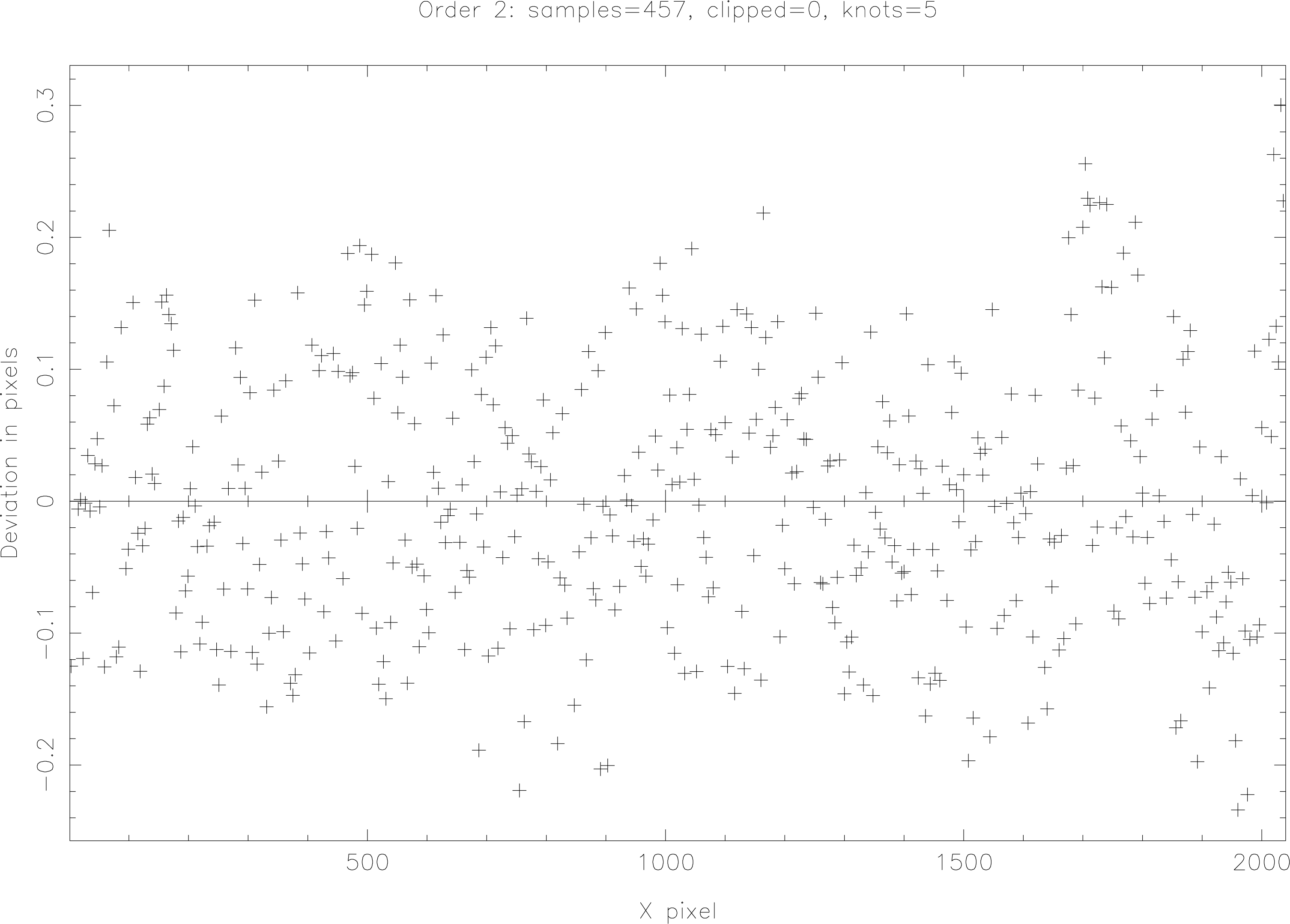

3:ech_fitord – Clip Trace Polynomials

This routine performs automatic or manual clipping of points from the set of samples representing the path of an order across the frame. A variety of methods for manually clipping points are available, and the degree of the polynomial used may also be altered. The relationship of the fit to the trace samples, and the deviations, may be examined graphically.

Orders may be repeatedly re-fitted once they have been initially traced. In general an automatic fit, clipping to a maximum deviation of a third of a pixel, will yield good trace polynomials. The routine may also be used to remove sets of points which are distorting a fit, for example a run of bad centre estimates caused by a defective column/row.
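Automatic clipping of the kind described here (and under the A(utoclip) key below) can be sketched as: fit, find the sample with the largest absolute deviation, clip it, and refit. The sketch mirrors the documented TUNE_MXCLP/TUNE_CLPMAXDEV behaviour with the hypothetical arguments `max_clip` and `max_dev`; the function name and the `min_keep_frac` rule for giving up on an order (returning `None`, i.e. ‘disabling’ it) are assumptions, not ECHOMOP's code.

```python
import numpy as np
from numpy.polynomial import polynomial as P

def autoclip_trace(x, y, degree, max_dev=1/3, max_clip=0, min_keep_frac=0.5):
    """Iteratively clip the worst trace sample and refit (sketch only).

    max_clip > 0 clips exactly that many samples (cf. TUNE_MXCLP);
    max_clip == 0 clips until the worst |deviation| < max_dev (cf. TUNE_CLPMAXDEV).
    Returns (coeffs, keep); coeffs is None if too few samples remain.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    keep = np.ones(x.size, dtype=bool)
    n_clipped = 0
    while True:
        if keep.sum() < max(degree + 1, min_keep_frac * x.size):
            return None, keep  # hypothetical 'disable the order' rule
        coeffs = P.polyfit(x[keep], y[keep], degree)
        # Deviations of the surviving samples; clipped ones can never be 'worst'.
        dev = np.where(keep, np.abs(y - P.polyval(x, coeffs)), -np.inf)
        worst = int(np.argmax(dev))
        done = n_clipped >= max_clip if max_clip > 0 else dev[worst] < max_dev
        if done:
            return coeffs, keep
        keep[worst] = False
        n_clipped += 1
```

Clipping one sample per refit, rather than all samples above the limit at once, avoids discarding good samples whose deviations were only inflated by a single bad point pulling the fit.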

This routine should be used manually on single orders when they are clearly not correctly fitted. This will usually become apparent when viewing the paths of the fitted order traces using ech_trplt. In many cases it will be possible to get a good trace fit by clipping a set of obviously bad samples and re-fitting (perhaps with a lower-degree polynomial).

The other main option for coping with such problems is to rely on the trace consistency checking routine, which will produce a set of consistent trace fits in most cases. If a particular order is beyond recovery and you do not wish to extract it at all, then it may be disabled by clipping away all of its trace samples with this routine.

The figure above shows an example of the deviations plot after automatic clipping has been performed on an order.

If you set TRC_INTERACT=YES, a multi-option menu will be displayed. The options are:

- . (full stop): Clips the sample nearest to the cursor when the key is pressed.

- N(egative threshold): Clips all samples with deviations more negative than the current Y-position of the cursor.

- P(ositive threshold): Clips all samples with deviations more positive than the current Y-position of the cursor.

- C(lip): Clips all points with absolute deviations greater than the current Y-position of the cursor.

- R(ange clip): Allows the clipping of a set of samples denoted by a range of values along the X-axis of the trace. This will generally be used when the order is only partially present, or to remove the contributions of a swath of bad columns. The starting point of the range to be clipped is the current cursor X-position, and the end point is selected by moving the cursor and hitting any key.

- B(ox clip): Like option R, but allows you to specify the corners of a box in which all samples will be clipped. Any two opposite corners which define the area to be clipped can be used. The first corner is the current cursor position; the second (opposite) corner is selected by moving the cursor and hitting any key.

- A(utoclip): Selects automatic clipping, in which points are iteratively clipped and the trace re-fitted. The number of points to be clipped is set by TUNE_MXCLP. If this is zero, points are clipped until the absolute deviation of the worst sample has fallen below the threshold set by TUNE_CLPMAXDEV.

- G(o): Switches from interactive clipping to entirely automatic mode. For example, after manually clipping a couple of orders you may observe that the orders are well traced and that few points need to be clipped; the remaining work can then safely be left to auto-clipping. The Go option selects auto-clipping for the current and all subsequent orders.

- D(isable): Used when it is clear that the traced samples do not follow an order at all and you wish to prevent any subsequent processing of the order. Orders may also be disabled automatically if too small a fraction of samples remains after auto-clipping.

- O(ff): Toggles plotting of the fits; normally a replot occurs for every key hit.

- F(unction): Cycles through the types of fit available. Currently these are polynomial and spline.

- V(iew): The graph used for clipping shows the deviation of each sampled trace point. The View option instead shows the actual coordinates of the trace samples, providing an easy check of their agreement with the order path expected from visual examination of the raw data. Note that View mode is mutually exclusive with all other operations and must be exited (type any key) before clipping can be resumed.

- Q(uit): Leaves trace fitting for this order without saving the trace polynomial in the reduction database; the new fit is therefore lost.

- E(xit): Leaves trace fitting for the current order and saves the latest trace polynomial in the reduction database.

- !: Leaves trace fitting for this order and all subsequent orders.

- Plus (+): Increments the degree of the polynomial used to fit the trace samples, up to the maximum specified by TUNE_MAXPOLY.

- Minus (-): Decrements the degree of the polynomial used to fit the trace samples.

Parameters:

Reduction File Objects:

- NX_PIXELS - type: _INTEGER, access: READ.

- TRACE - type: _REAL, access: READ.

- TRC_CLIPPED - type: _INTEGER, access: READ/WRITE.

- TRC_IN_DEV - type: _REAL, access: READ/WRITE.

- TRC_OUT_DEV - type: _REAL, access: READ/WRITE.

- TRC_POLY - type: _DOUBLE, access: READ/WRITE.

4:ech_spatial – Determine Dekker/Object Limits

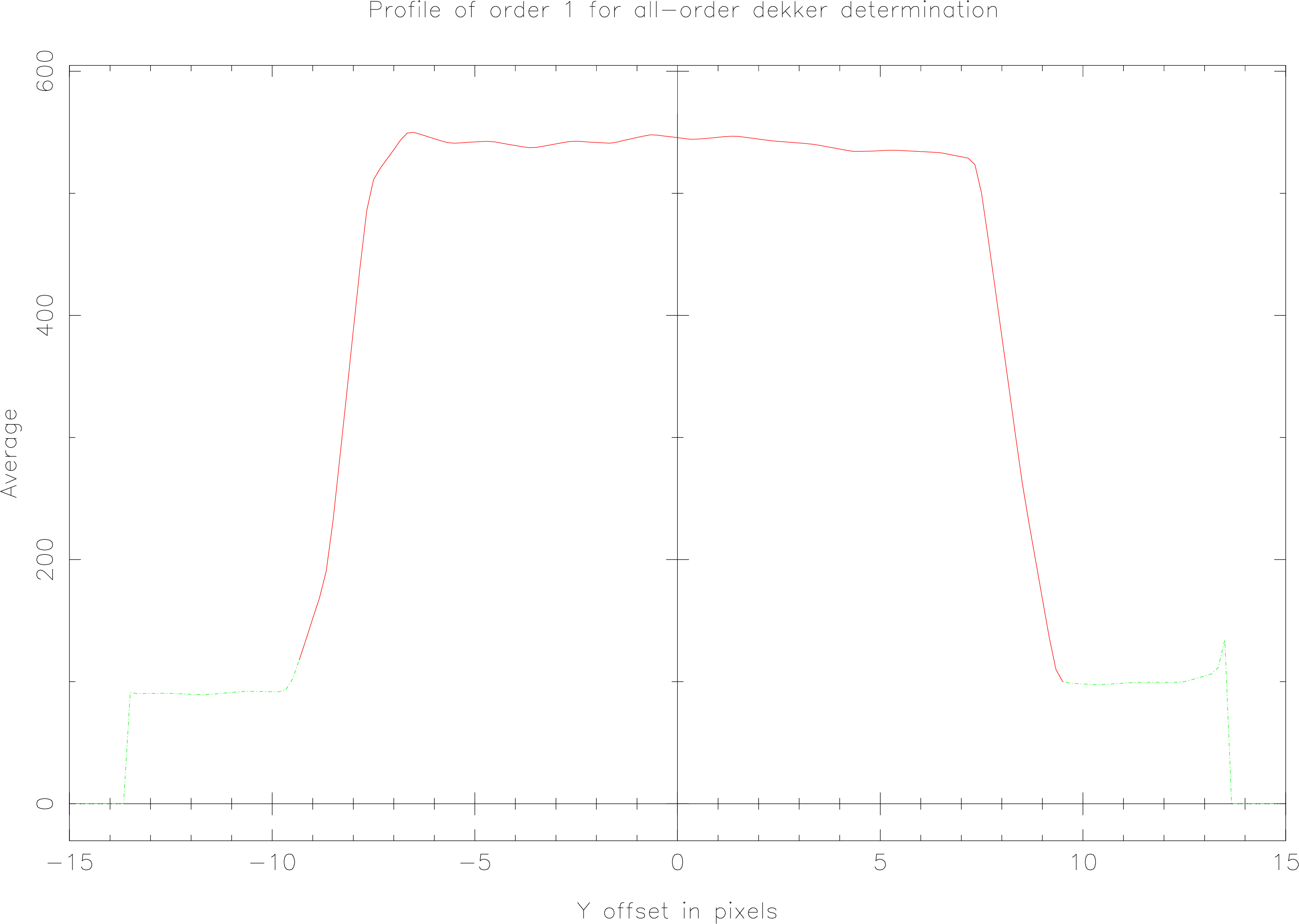

The determination of the position of the object data within the slit proceeds by first locating the slit jaws. To do this either an ARC frame or (ideally) a flat-field frame may be used. The profile is calculated along the slit, and the edges are then located by determining the points where the profile intensity drops below a tunable threshold. For problem cases the dekker positions may also be indicated manually on a graph of the ARC/flat-field profile. Once the dekker limits have been determined, the object profile is measured. The object is sampled by averaging the profile over all orders (using the central columns of the frame only). The median intensity of the profile inside the dekker limits is then calculated and used to set an expected sky threshold. The profile is then examined by stepping outwards from its peak until the profile intensity falls to the expected sky threshold. Masks are then created in which each pixel along the slit is flagged as sky or object. You may also interactively edit these masks and flag particular sections of the profile as sky or object. Only pixels flagged as ‘object’ will contribute to an extraction. Profile editing therefore provides a simple (if tedious) mechanism for producing spatially resolved spectra: each spatial increment in turn is flagged as the only object pixel in the profile, and extracted.
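The dekker and object-limit steps just described can be sketched for a single, already averaged slit profile. The function names and the dekker threshold `frac` (a fraction of the peak flat-field intensity, standing in for the tunable threshold) are assumptions; the object search follows the text: take the median inside the dekker as the expected sky level and step outwards from the peak until the profile falls to it.

```python
import numpy as np

def dekker_limits(flat_profile, frac=0.5):
    """First and last slit pixels whose intensity is at least frac * peak (sketch)."""
    p = np.asarray(flat_profile, dtype=float)
    inside = np.nonzero(p >= frac * p.max())[0]
    return int(inside[0]), int(inside[-1])

def object_masks(obj_profile, dek_lo, dek_hi):
    """Object and sky masks along the slit (True = pixel belongs to the class)."""
    p = np.asarray(obj_profile, dtype=float)
    sky_level = np.median(p[dek_lo:dek_hi + 1])  # expected sky threshold
    peak = dek_lo + int(np.argmax(p[dek_lo:dek_hi + 1]))
    lo = hi = peak
    # Step outwards from the peak until the profile falls to the sky level.
    while lo > dek_lo and p[lo - 1] > sky_level:
        lo -= 1
    while hi < dek_hi and p[hi + 1] > sky_level:
        hi += 1
    obj = np.zeros(p.size, dtype=bool)
    obj[lo:hi + 1] = True
    sky = np.zeros(p.size, dtype=bool)
    sky[dek_lo:dek_hi + 1] = True
    sky &= ~obj  # everything else inside the dekker is sky
    return obj, sky
```

Pixels outside the dekker end up in neither mask, matching the ‘outside the slit’ status described below.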

The default behaviour is to average all the orders together, thus generating a composite profile. In certain circumstances it may be necessary to derive a separate profile for each order (for instance for multi-fibre spectra). To select this option the parameter TUNE_USE_NXF=1 must be set.

This option must be used before any extraction of the orders can take place. It consists of two steps:

- 4.1 (ech_dekker)

Analyse the Arc/Flat-field frame to determine the positions of the edges of the dekker.

- 4.2 (ech_object)

Analyse the Object frame to determine the position of the object within the slit, and its extent above and below the traced order.

In each case a plot is produced on the graphics device (specified using the SOFT parameter), showing the status of pixels according to their position relative to the path of the order across the frame.

The Figure above shows an example of the dekker plot. The regions indicated by a solid line are inside the dekker. Pixels in positions corresponding to the dot/dash line are outside the dekker and will not be used during processing.

The Figure above shows an example of the object limits plot. The regions indicated by a solid line are ‘object’ pixels and will contribute to the extraction. Regions shown by the dashed line are ‘sky’ pixels, which will be used to calculate the sky model. Pixels in the dot/dash region are outside the dekker limits and will not be used during processing.

The status of each pixel, as set by this option, determines what part it plays when the extraction takes place; that is, whether the pixel is part of the sky, part of the object, outside the slit, or to be ignored completely by the extraction routine. If the parameter PFL_INTERACT=YES is set, you are also given the opportunity to edit these quantities on a profile plot.

In addition, the limits may be specified using the following parameters, which will override the values calculated by the modules:

- TUNE_DEKBLW to set the lower dekker limit.

- TUNE_DEKABV to set the upper dekker limit.

- TUNE_OBJBLW to set the lower object limit.

- TUNE_OBJABV to set the upper object limit.

After running Option 4, the post-trace cosmic-ray locator may be run if required (Option 17). It cannot be used earlier, as it uses the object limits derived in Option 4.

Parameters:

Reduction File Objects:

- CONTIN_PROFILE - type: _REAL, access: READ/WRITE.

- DEK_ABOVE - type: _INTEGER, access: READ/WRITE.

- DEK_BELOW - type: _INTEGER, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- OBJECT_PROFILE - type: _REAL, access: READ/WRITE.

- OBJ_MASK - type: _INTEGER, access: READ/WRITE.

- SKY_MASK - type: _INTEGER, access: READ/WRITE.

- TRC_POLY - type: _DOUBLE, access: READ.

5:ech_ffield – Model Flat-field Balance Factors

The ‘balance factors’ are the per-pixel values which are multiplied into the raw data to perform the photometric correction required for the differing pixel-to-pixel responses of some detectors (mostly CCDs).

echomop will use a flat-field frame if one is available. The flat-field frame should be produced using a continuum lamp exposure with the instrument in a configuration identical to that used for the object exposure (to ensure that any wavelength-dependent behaviour of pixel response is taken into account). The exposure should be of sufficient duration to attain high counts in the brightest parts of the image.

echomop fits functions in two directions: along the traces, and along the image columns. The degree of the polynomials fitted is tunable, but the (low) default degree will normally be perfectly reasonable. Each flat-field pixel in an order is then used to calculate a ‘balance’ factor. This is a number close to 1 which represents the factor by which a given pixel exceeds its expected value (predicted by the polynomial).

Note that this technique requires that the flat-field orders vary slowly and smoothly both along and across each order.

If required, many flat-field frames (with identical instrument configuration) may be co-added before running ECHOMOP, and the resulting high-S/N flat field used by ECHOMOP. At present no special facilities are provided for calculating the actual error on such a co-added flat field; the expected error (derived from root-N statistics) is used to calculate the error on the balance factors unless appropriate variances are provided in the flat-field frame error array. Other modes of operation are selected by setting the parameter FLTFIT. If the parameter is set to NONE, no modelling in the X-direction will be performed. If the parameter is set to MEAN, no polynomials are used; instead the balance factors are calculated using the local mean value based on a 5-pixel sample. This will normally be used when the flat field at the dekker limits cannot be modelled because its intensity changes too rapidly on a scale of 1 pixel, due to under-sampling of the profile.

The full set of modelling options is:

- MEAN a local mean parallel to trace.

- MEDIAN a local median parallel to trace.

- POLY a polynomial function parallel to trace.

- SPLINE bi-cubic spline parallel to trace.

- SMOOTH Gaussian smoothing along pixel rows.

- SLOPE local slope along pixel rows.

- NONE all balance factors set to unity.
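Three of the modelling options above (POLY, MEAN and NONE) can be sketched for a single row of flat-field pixels running parallel to a trace. This is an illustration only: the function name and the assumption that the trace runs along a pixel row are hypothetical, and the sketch returns `model / flat` so that multiplying raw data by the result applies the correction described above. Under that convention a pixel 5% more sensitive than the model gets a factor of about 0.95.

```python
import numpy as np

def balance_factors(flat_row, mode="POLY", degree=3, window=5):
    """Balance factors for one row of flat-field pixels along a trace (sketch).

    Returned as model / flat (this sketch's convention), so multiplying raw data
    by them corrects for pixel-to-pixel sensitivity differences.
    """
    f = np.asarray(flat_row, dtype=float)
    if mode == "NONE":
        return np.ones_like(f)  # all balance factors set to unity
    if mode == "POLY":
        x = np.arange(f.size)
        model = np.polynomial.Polynomial.fit(x, f, degree)(x)
    elif mode == "MEAN":
        half = window // 2       # 5-pixel local mean by default
        padded = np.pad(f, half, mode="edge")
        model = np.convolve(padded, np.ones(window) / window, mode="valid")
    else:
        raise ValueError(f"mode {mode!r} not covered by this sketch")
    return model / f
```

The MEAN model follows rapid intensity changes far more closely than a low-degree polynomial, which is why it suits under-sampled dekker edges; the price is that a single hot pixel also biases the factors of its neighbours within the window.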

If you produce your own balance-factor frame, it may be used by echomop; the parameter TUNE_PREBAL should then be set to YES. In this case no modelling takes place and the balance factors are simply copied from the frame supplied. This should be used if the echomop models do not generate an appropriate flat field. Where no flat-field frame is available, the parameter TUNE_NOFLAT=YES can be specified; alternatively, you can reply NONE when prompted for the name of the flat-field frame. In either case, the balance factors will be set to unity.

Parameters:

Reduction File Objects:

- DEK_ABOVE - type: _INTEGER, access: READ.

- DEK_BELOW - type: _INTEGER, access: READ.

- FITTED_FLAT - type: _REAL, access: READ/WRITE.

- FLAT_ERRORS - type: _REAL, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- TRC_POLY - type: _DOUBLE, access: READ.

6:ech_sky – Model Sky Background

The sky intensity is modelled at each increment along the order. The degree of the polynomial fitted is adjustable; by default it is set to zero to obtain the ‘average’ sky behaviour.

The use of polynomials or splines of higher degree is advisable when there is a significant gradient in the sky intensity along the slit, as the polynomials are used to predict the sky intensity at each object pixel in the order independently. Note that the meaning of ‘increment’ differs between regular and 2-D distortion-corrected extractions. For a simple extraction an increment is a single-pixel column. For a 2-D extraction each increment is a scrunched wavelength-scale unit, thus ensuring the accurate modelling of distorted bright emission lines in the sky.
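For the simple-extraction case (one increment per pixel column), the per-increment sky fit can be sketched as follows. The function name and array layout (slit position along axis 0, increments along axis 1, a boolean sky mask along the slit such as the one produced by Option 4) are assumptions for the example; degree 0 gives the default ‘average’ sky, while a higher degree follows a gradient along the slit.

```python
import numpy as np
from numpy.polynomial import polynomial as P

def model_sky(order_image, sky_mask, degree=0):
    """Sky model for one order (sketch, not ECHOMOP's code).

    order_image: array (n_slit, n_increments), slit position along axis 0.
    sky_mask:    boolean array (n_slit,), True where a pixel is sky.
    """
    img = np.asarray(order_image, dtype=float)
    y = np.arange(img.shape[0])
    model = np.empty_like(img)
    for j in range(img.shape[1]):           # one fit per increment (column)
        coeffs = P.polyfit(y[sky_mask], img[sky_mask, j], degree)
        model[:, j] = P.polyval(y, coeffs)  # predicted sky at every slit pixel
    return model
```

Because the fit is evaluated at every slit position, the sky under each object pixel is predicted independently, as the text requires.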

It is also possible to model the sky intensity in the wavelength direction using polynomials. In this case parameters are available to define the threshold for possible sky lines, which will be excluded from the fit (TUNE_SKYLINW and TUNE_SKYLTHR). When a wavelength-dependent model is used, it is also possible to request an extra simulation step which allows accurate evaluation of the errors on the fitted model (using a Monte Carlo simulation). This procedure can improve the variances used during an ‘optimal’ extraction, particularly in cases where the object is only fractionally brighter than the sky. The simulation is enabled using the hidden parameter TUNE_SKYSIM=YES.

The determination of which pixels are sky is done using the masks set by the profiling task or ECHMENU option. These masks can be freely edited to cope with any special requirements as to which regions of the slit are to be used for the sky. This facility is of particular use when processing frames where ‘periscopes’ have been used to add in ‘sky’ regions when observing an extended source. In such cases echomop currently provides no special treatment, and the periscope sky-pixel positions will have to be edited into the sky mask using the task ech_spatial/ECHMENU Option 4.

It is also possible to use a separate sky frame by flagging all pixels as sky, modelling the sky, and then resetting the requisite object pixels (using Option 4.2) before extracting using the object frame.

In cases where there is significant contamination of the background by scattered light, a global model of the background intensity can be used instead. ech_mdlbck (Option 22) performs this process and should be used instead of the sky modelling option (the two processes are mutually exclusive).

Parameters:

Reduction File Objects:

- DEK_ABOVE - type: _INTEGER, access: READ.

- DEK_BELOW - type: _INTEGER, access: READ.

- FITTED_FLAT - type: _REAL, access: READ.

- FITTED_SKY - type: _REAL, access: READ/WRITE.

- FLAT_ERRORS - type: _REAL, access: READ.

- FSKY_ERRORS - type: _REAL, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- SKY_MASK - type: _INTEGER, access: READ.

- SKY_SPECTRUM - type: _REAL, access: READ/WRITE.

- SKY_VARIANCE - type: _REAL, access: READ/WRITE.

- TRC_POLY - type: _DOUBLE, access: READ.

7:ech_profile – Model Object Profile

The object profile model is constructed by subsampling the profile and may be an all-order average, or calculated independently for each order (enabled by setting TUNE_USE_NXF=1). There are also facilities for modelling profiles which vary slowly with wavelength by fitting polynomials in the wavelength direction (set TUNE_OBJPOLY>0).

The degree of subsampling is controlled using the parameter TUNE_PFLSSAMP, which sets the number of subsamples across the spatial profile.

Parameters:

Reduction File Objects:

- DEK_ABOVE - type: _INTEGER, access: READ.

- DEK_BELOW - type: _INTEGER, access: READ.

- FITTED_SKY - type: _REAL, access: READ.

- MODEL_PROFILE - type: _REAL, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- OBJ_MASK - type: _INTEGER, access: READ.

- SKY_MASK - type: _INTEGER, access: READ.

- TRC_POLY - type: _DOUBLE, access: READ.

8:ech_extrct – Extract Object and Arc Order Spectra

The extraction of both object and arc orders proceeds in parallel to ensure that the same weights are used in both cases. Three weighting schemes are currently implemented. All methods maintain variances and allow individual pixels to be excluded from the extraction process by referring to the object frame quality array. Simple extraction weights all object pixels equally and is much less computationally demanding than the other methods. The object intensity is calculated by summing all the object pixels in each column for each order.

Profile-weighted extraction weights each pixel by a factor $P_{j,x}$, where $P_{j,x}$ is the calculated normalised profile at (sub-sampled) spatial offset $j$ from the trace centre and $x$ is the column number.

Optimally weighted (or variance-weighted) extraction weights each pixel by the product of the calculated profile $P_{j,x}$ and the reciprocal of an estimate of the variance $V_{j,x}$ of the pixel intensity, i.e. by $P_{j,x}/V_{j,x}$. This variance estimate follows the scheme described by Horne in An Optimal Extraction Algorithm for CCD Spectroscopy (P.A.S.P. 1986), modified to cope with the profile subsampling associated with sloping and/or distorted orders.

In addition, the rejection of cosmic-ray-contaminated pixels has been made available as a separate function in echomop, as the package is not dedicated to CCD-only data reduction. The original cosmic-ray rejection described by Horne has also been retained and can be enabled using the parameter TUNE_CRCLEAN, although the dedicated CR module seems to perform better in most cases. Optimally weighted extraction has been shown to improve the S/N of the extracted spectra by an amount equivalent to up to a 20% increase in exposure time, and its use is therefore encouraged in most cases. The provision of sky variance modelling helps to ensure that the optimal extraction still performs ‘optimally’ even with very low S/N data.
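For a single column, the simple and optimal estimates can be compared with the following NumPy sketch. The optimal estimator is the standard one from Horne (1986): with the profile $P$ normalised over the object pixels, flux $= \sum P(D-S)/V \,/\, \sum P^2/V$ and variance $= 1/\sum P^2/V$. The function names are hypothetical, and the sketch omits ECHOMOP's profile subsampling, quality-array handling and cosmic-ray rejection.

```python
import numpy as np

def simple_extract(data, sky, var, obj_mask):
    """Unweighted sum of sky-subtracted object pixels in one column."""
    return float(np.sum((data - sky)[obj_mask])), float(np.sum(var[obj_mask]))

def optimal_extract(data, sky, var, profile, obj_mask):
    """Profile/variance-weighted estimate for one column (Horne 1986 form)."""
    p = profile[obj_mask] / profile[obj_mask].sum()  # normalise over object pixels
    d = (data - sky)[obj_mask]
    v = var[obj_mask]
    denom = np.sum(p * p / v)
    flux = np.sum(p * d / v) / denom
    return float(flux), float(1.0 / denom)  # variance = sum(p) / denom, with sum(p) = 1
```

With noise-free data both estimators recover the same flux, but the optimal variance is never larger than the simple one, which is the source of the S/N gain quoted above.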

NOTE: Option 19 (Quick-look extraction) is provided primarily for at-the-telescope use, to let the observer quickly check that decent data are being obtained. Quick-look does not use the sky model or flat-field model and should not be used to produce spectra for further analysis.

Parameters:

Reduction File Objects:

- BLAZE_SPECT - type: _REAL, access: READ/WRITE.

- DEK_ABOVE - type: _INTEGER, access: READ.

- DEK_BELOW - type: _INTEGER, access: READ.

- EXTRACTED_ARC - type: _REAL, access: READ/WRITE.

- EXTRACTED_OBJ - type: _REAL, access: READ/WRITE.

- EXTR_ARC_VAR - type: _REAL, access: READ/WRITE.

- EXTR_OBJ_VAR - type: _REAL, access: READ/WRITE.

- FITTED_FLAT - type: _REAL, access: READ/WRITE.

- FITTED_PFL - type: _DOUBLE, access: READ.

- FITTED_SKY - type: _REAL, access: READ/WRITE.

- FLAT_ERRORS - type: _REAL, access: READ/WRITE.

- FSKY_ERRORS - type: _REAL, access: READ/WRITE.

- MODEL_PROFILE - type: _REAL, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- OBJ_MASK - type: _INTEGER, access: READ.

- SKY_MASK - type: _INTEGER, access: READ.

- TRC_POLY - type: _DOUBLE, access: READ.

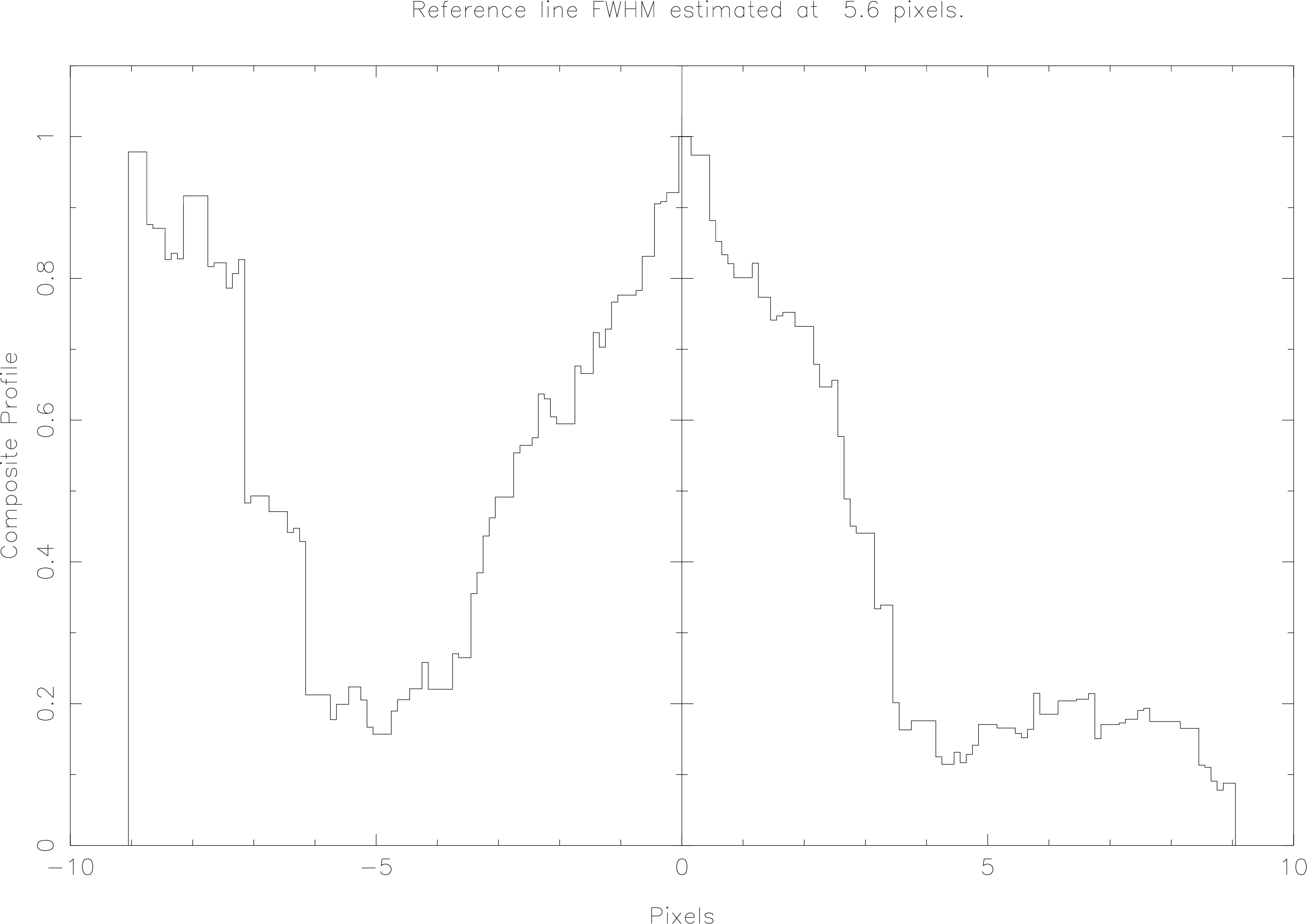

9:ech_linloc – Locate Arc Line Candidates

This option is used to locate arc line features for later identification. It consists of two steps:

The FWHM is evaluated by co-adding all possible arc line features in the arc frame, averaging the resulting profile, and calculating its FWHM. This value is used to scale the Gaussians which are fitted to each arc line in order to obtain an estimate of its center position (in X).
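The FWHM estimate described above might be sketched in Python as follows. This is an illustration under stated assumptions, not echomop's code. Line windows are aligned on integer peak pixels before averaging. The baseline is taken to be zero. The half-maximum crossings are found by linear interpolation. `coadd_profiles` and `fwhm` are hypothetical names.

```python
def coadd_profiles(spectrum, peaks, half_width):
    """Average the +/- half_width pixel windows centred on each integer peak."""
    n = 2 * half_width + 1
    acc = [0.0] * n
    used = 0
    for pk in peaks:
        if pk - half_width < 0 or pk + half_width >= len(spectrum):
            continue  # skip lines too close to the ends of the order
        for k in range(n):
            acc[k] += spectrum[pk - half_width + k]
        used += 1
    if used == 0:
        raise ValueError("no usable line windows")
    return [a / used for a in acc]


def fwhm(profile):
    """FWHM in pixels from linearly interpolated half-maximum crossings.

    Assumes a single-peaked profile on a zero baseline.
    """
    imax = max(range(len(profile)), key=profile.__getitem__)
    half = 0.5 * profile[imax]
    i = imax  # walk left until the next pixel is at or below half maximum
    while i > 0 and profile[i - 1] > half:
        i -= 1
    if i == 0:
        raise ValueError("left half-maximum crossing not found")
    left = (i - 1) + (half - profile[i - 1]) / (profile[i] - profile[i - 1])
    j = imax  # walk right in the same way
    while j < len(profile) - 1 and profile[j + 1] > half:
        j += 1
    if j == len(profile) - 1:
        raise ValueError("right half-maximum crossing not found")
    right = j + (profile[j] - half) / (profile[j] - profile[j + 1])
    return right - left
```

Averaging before measuring means that a few bright lines dominate the shape, so in practice the windows might be normalised to unit peak first; that choice is left open here.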

Possible arc lines are denoted by any region of an order in which 5 consecutive pixels (P1-5) obey the

following relation:

and are amenable to a Gaussian fit with the FWHM calculated.

This ensures that even very faint features are put forward for possible identification (useful when

there are no bright lines in an entire order).
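The five-pixel relation used to select candidates is not reproduced in this copy of the text. The sketch below therefore assumes a simple strict rise-then-fall (P1 < P2 < P3 > P4 > P5). It estimates the centre from a Gaussian of fixed width, the measured FWHM, passed through the pixels on either side of the peak. Both the selection rule and this three-point centring are illustrative stand-ins, not echomop's Gaussian fitter, and the function names are hypothetical.

```python
import math

# sigma = FWHM / (2 sqrt(2 ln 2)) for a Gaussian
FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def candidate_peaks(spectrum):
    """Indices i where pixels i-2..i+2 rise strictly to i and then fall strictly.

    ASSUMPTION: stands in for echomop's own five-pixel relation.
    """
    hits = []
    for i in range(2, len(spectrum) - 2):
        p1, p2, p3, p4, p5 = spectrum[i - 2:i + 3]
        if p1 < p2 < p3 and p3 > p4 > p5:
            hits.append(i)
    return hits


def gaussian_centre(spectrum, i, fwhm):
    """Sub-pixel centre of a Gaussian of known FWHM peaking near pixel i.

    With sigma fixed, ln I(i+1) - ln I(i-1) = 2 (c - i) / sigma**2,
    so the centre c follows from the two neighbouring pixels.
    """
    sigma = fwhm * FWHM_TO_SIGMA
    lo, hi = spectrum[i - 1], spectrum[i + 1]
    if lo <= 0 or hi <= 0:
        return float(i)  # logarithm undefined: fall back to the peak pixel
    return i + 0.5 * sigma ** 2 * math.log(hi / lo)
```

Because the width is fixed by the FWHM measured from the co-added profile, only the centre is free. This is why even faint features can be centred reasonably, although noise in the two neighbouring pixels then maps directly onto the centre estimate.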

Parameters:

Reduction File Objects:

- EXTRACTED_ARC - type: _REAL, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NREF_FRAME - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- OBS_INTEN - type: _REAL, access: READ/WRITE.

- OBS_LINES - type: _REAL, access: READ/WRITE.

- REF_LINE_FWHM - type: _REAL, access: READ/WRITE.

- TRC_POLY - type: _DOUBLE, access: READ.

10:ech_idwave – Wavelength Calibrate

The wavelength calibration is done using a reference feature list, usually provided by an ARC-lamp

exposure. The routine allows any candidate features to be identified and used as ‘knowns’ for the

calibration (as position/intensity pairs). These features may then be manually identified using a

reference lamp atlas. Facilities are provided for adding/deleting lines and altering the degree of

polynomial fit performed.

An automatic line-identifier is included which operates by searching the supplied line list for multi-line ‘features’. You may optimise the search by constraining the permissible wavelength range and/or dispersion (in Angstroms/pixel).

In addition, the program will automatically constrain the search range further if it can determine the central order number and wavelength (by looking in the data frame header).

As soon as three orders have been successfully calibrated, the search range for the remaining orders is

re-evaluated to take this into account. In general, the automatic method will be most useful when you

are unsure of the exact wavelength range covered. When the level of doubt is such that the

wavelength scale may decrease from left-to-right across the frame, then the software may be

instructed to automatically check for this ‘reversed’ condition. Set parameter TUNE_REVCHK to YES to

check for a reversed arc; the parameter defaults to NO to avoid wasting CPU time. If the wavelength

scale is reversed, then you should use FIGARO IREVX to flip all the relevant images and then re-start

the reduction.

Finally, the software is flexible as to the vertical orientation of the orders, i.e., longer-wavelength orders may be at the top or bottom of the frame (for échelle data). Calibration may currently be performed using either 1 or 2 (before and after) ARC frames. See Arc Frames for details.

Options are presented in a menu form and selected by typing a one or two character string, followed

by carriage return. The following options are supported:

- AI (automatic identification)

Initiates a search and match of the feature database. Any preset limitations on the

wavelength and dispersion range will be taken into account. When a solution is found, a fit report is produced which indicates its probable status. You may then refine the solution manually, or search for further solutions by hitting the A key.

- E(xit)

Leaves the line identification menu and updates the reduction database copy of the

wavelength polynomial to reflect the latest calculated fit.

- H(elp)

Provides interactive browsing of the relevant sections of the help library for line

identification.

- IM (import ECHARC data)

Provides an interface to the ECHARC arc-line identification program. In general the XP option should first be used to export data for ECHARC processing. ECHARC would then be run, and finally the data IMported back into echomop.

- O(rder selection)

Used to change the currently selected order when operating manually. The order number

which is to be selected will be prompted for.

- P(olynomial degree)

Used to alter the degree of polynomial to be used for the wavelength fitting. This may

vary up to the maximum set by TUNE_MAXPOLY.

- XP (export data for ECHARC)

Used to provide an interface to the ECHARC arc line identification program. In general

the XP option should first be used to export data for ECHARC processing. ECHARC

would then be run, and finally the data IMported back into echomop.

- I(nteractive identification)

Enters the interactive line identification and examination section. This section has a menu which provides facilities for adding, deleting, re-fitting and listing identified lines.

This sub-menu includes a set of interactive options to assist in identifying arc lines and fitting the wavelength polynomial to describe the variation along an order. The options provided are as follows and are all selected using a single character. Note that the carriage-return key is not needed for option selection; care is therefore needed to ensure that the cursor is correctly positioned before a cursor-dependent option is selected.

- A(uto)

Initiates an automatic search and match of the reference feature database. Any preset

limits on the wavelength and dispersion search range are taken into account. Each

possible solution is reported upon with an indication of its probable accuracy. You

may then choose to reject it, or examine it in detail for verification or modification.

- B(Clip)

Automatically clips any potential blends from the set of identified lines, and re-fits the wavelength polynomial. Possible blends are flagged using the identifier B on the graph, and by a trailing B on the line details output during fits.

- C(lear all identifications)

Removes all identified line information for the order. The polynomial fit remains

though, and any good lines could be recovered by using the R(e-interpolate) option.

- D(elete a line)

Removes an identified line from the set of identified lines. This will usually be used

to remove a suspect line which has been incorrectly automatically identified. The

identified line nearest to the cursor's X-position when D is pressed will be the one which is deleted.

- E(xit)

Leaves the interactive identification/fitting routine and returns to the main line id

menu. The current set of fitted lines, and the polynomial are saved in the reduction

database.

- F(it polynomial)

Performs a polynomial fit to the positions/wavelengths of all currently identified

lines. Reports on the deviation of each line from the fit, and the improvement

possible by deleting each line and re-fitting. No check is made against the database to see if further lines may now be identified; use the R option if this is required.

- I(nformation)

Reports information known about the nearest located line (relative to X-position of

cursor when I is pressed). Details include position, wavelength etc.

- K(eep)

Flags the nearest identified line to be retained during re-interpolation even when

it would otherwise be rejected. Any manually identified lines will be automatically

flagged in this way. Kept lines are indicated by a K on the graph.

- L(ist known lines)

Examines the feature database to find the nearest features to the current X-position

of the cursor, using the current polynomial fit to predict the corresponding wavelength.

- M(ove to coordinate)

When viewing a zoomed graph of lines, allows the central point of the plot to be

moved to any point along the X-axis. A prompt is made for the exact co-ordinate

required. Once a wavelength scale has been fitted, Move operates on wavelengths,

otherwise it operates on X-coordinates.

- N(ew line)

Used to specify a completely new feature. Should be used when you wish to identify

a line which has not been located at all by the arc line location algorithm. All located

lines brighter than the threshold (see Threshold option) are indicated by a

appearing above their peak. Identified lines additionally have the wavelength shown.

- O(utwards zoom)

Reverses any currently selected zoom factor on the displayed graph of lines.

- P(lot)

Refreshes the plotted graph.

- Q(uit)

Leaves the manual identification/fitting routine without updating the polynomial

in the reduction database.

NOTE: any newly identified lines will be saved. Only the polynomial

(which represents the outcome of an identification) is left unchanged.

- R(e-interpolate)

A polynomial fit is made to the currently identified lines. This polynomial is then used to

search the database for any new line candidates. All new candidates are then added into the

fit and the polynomial iteratively re-fitted and clipped until a stable solution is

obtained.

- S(et line wavelength)

Used to set the wavelength of a line. The line whose wavelength is to be set should

be the one nearest to the X-position of the cursor and may be an identified or

unidentified line. Use the N(ew) option to create a new line where none had been

auto-located.

- T(hreshold)

Used to set the threshold for identifiable lines. Only identifiable lines can be automatically

identified by the Auto or Re-interpolate options. The threshold is plotted on the graph as a

horizontal dotted line and is initially set according to the value of the parameter

TUNE_IDSTRNG.

- XCLIP

Used to toggle automatic clipping of poorly fitted lines. It is checked every time a fit is

performed and when set will allow the fitting module to reject lines if doing so will

significantly improve the RMS for the fit.

- Z(oom plot)

Increases the magnification of the graph used to plot the line positions. To reverse the effect

use the O(utwards zoom) option.

- + (Increment polynomial order)

Increments the degree of polynomial used for the wavelength fitting. May be increased up

to the maximum specified by the parameter TUNE_MAXPOLY.

- - (Decrement polynomial order)

Decrements the degree of polynomial used for the wavelength fitting.

- > (Shift display right)

When a zoomed graph is plotted, will shift the viewpoint along to the right (plus X) by an

amount sufficient to show the adjacent section of the plot.

- < (Shift display left)

When a zoomed graph is plotted, will shift the viewpoint along to the left (minus X) by an amount sufficient to show the adjacent section of the plot.

- (any other key)

Provides available information on the line nearest to the current X-position of the cursor.

- =

Switches between fitting functions. Currently supported are polynomials and

splines.

- ^

Sends a copy of the current plotted graph to the hardcopy device. The appropriate device

must have been supplied at program startup (e.g. echmenu hard=ps_l).

The Figure above shows an example of the plot displayed during interactive line-identification. The

following points should be noted:

- Identified lines have their wavelength shown directly above the peak

- Possible blends are flagged with a B

- The threshold intensity for identifiable lines is shown as a horizontal dot/dash line

- The positions of line-list lines are shown along the top X-axis
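The F(it), R(e-interpolate) and XCLIP descriptions above amount to a least-squares polynomial fit with residual-driven rejection. Below is a minimal Python sketch, assuming wavelength is fitted as a polynomial in X. XCLIP-style clipping is modelled as removing one line at a time whenever that cuts the RMS by a chosen factor. The 0.5 factor, the RMS floor and the function names are illustrative assumptions, not echomop's actual criteria.

```python
import math


def polyfit(xs, ys, deg):
    """Least-squares polynomial coefficients, lowest power first.

    Solves the normal equations by Gauss-Jordan elimination; adequate for
    low degrees, but X should be centred and scaled for high degrees.
    """
    n = deg + 1
    m = [[0.0] * (n + 1) for _ in range(n)]  # augmented [A^T A | A^T y]
    for x, y in zip(xs, ys):
        p = [x ** k for k in range(n)]
        for i in range(n):
            for j in range(n):
                m[i][j] += p[i] * p[j]
            m[i][n] += p[i] * y
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[piv] = m[piv], m[c]
        for r in range(n):
            if r != c:
                f = m[r][c] / m[c][c]
                for k in range(c, n + 1):
                    m[r][k] -= f * m[c][k]
    return [m[i][n] / m[i][i] for i in range(n)]


def polyval(coeffs, x):
    return sum(c * x ** k for k, c in enumerate(coeffs))


def rms(xs, ys, coeffs):
    return math.sqrt(sum((y - polyval(coeffs, x)) ** 2
                         for x, y in zip(xs, ys)) / len(xs))


def fit_with_clipping(xs, ys, deg, gain=0.5, floor=1e-6):
    """Fit wavelength = poly(X), dropping one line at a time.

    The line whose deletion gives the lowest re-fitted RMS is removed while
    that RMS is below gain * (current RMS). Returns (kept indices, coeffs).
    """
    keep = list(range(len(xs)))
    while True:
        px = [xs[i] for i in keep]
        py = [ys[i] for i in keep]
        coeffs = polyfit(px, py, deg)
        current = rms(px, py, coeffs)
        if current <= floor or len(keep) <= deg + 2:
            return keep, coeffs
        trials = []
        for k in range(len(keep)):  # re-fit without each line in turn
            tx, ty = px[:k] + px[k + 1:], py[:k] + py[k + 1:]
            trials.append((rms(tx, ty, polyfit(tx, ty, deg)), k))
        best, k = min(trials)
        if best >= gain * current:  # no single deletion improves the fit enough
            return keep, coeffs
        del keep[k]


if __name__ == "__main__":
    xs = [100.0 * i for i in range(1, 10)]  # line centres (pixels)
    ys = [4000.0 + 0.1 * x for x in xs]     # wavelengths (Angstroms)
    ys[4] += 5.0                            # one mis-identified line
    print(fit_with_clipping(xs, ys, deg=1))
```

The per-line "RMS without this line" values computed inside the loop correspond to the improvement report produced by F; a K(eep) flag could be modelled by excluding kept lines from the trial deletions.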

Parameters:

Reduction File Objects:

- EXTRACTED_ARC - type: _REAL, access: READ.

- FITTED_WAVES - type: _DOUBLE, access: READ/WRITE.

- ID_COUNT - type: _INTEGER, access: READ/WRITE.

- ID_LINES - type: _REAL, access: READ/WRITE.

- ID_STATUS - type: _INTEGER, access: READ/WRITE.

- ID_WAVES - type: _REAL, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NREF_FRAME - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- OBS_INTEN - type: _REAL, access: READ/WRITE.

- OBS_LINES - type: _REAL, access: READ/WRITE.

- ORDER_IDNUM - type: _INTEGER, access: READ/WRITE.

- REF_LINE_FWHM - type: _REAL, access: READ/WRITE.

- WSEAR_END - type: _REAL, access: READ/WRITE.

- WSEAR_START - type: _REAL, access: READ/WRITE.

- W_POLY - type: _DOUBLE, access: READ/WRITE.

11:ech_blaze – Fit and Apply Ripple Correction

This option consists of two steps as follows:

- 11.1 (ech_fitblz) Model blaze function using a polynomial.

- 11.2 (ech_doblz) Apply blaze function to extracted spectrum.

If flux calibration is not being performed it is sometimes desirable to remove the ‘blaze’ function from

the extracted spectrum to assist in fitting line profiles etc. during data analysis.

A task is provided for this purpose which operates by fitting curves to the flat-field orders. The curves

can be polynomials, splines or simple fits based on local-median values. The fits may be automatically

or interactively clipped and the resulting blaze spectrum is normalised such that its median intensity

is unity.

The extracted spectrum is then divided by the normalised blaze. It is important to remember that this operation is performed upon the ‘extracted’ spectrum.

After the blaze function has been applied to an extracted order, the blaze values may be reset to unity (TUNE_BLZRSET=YES) to ensure that the order(s) cannot be re-flattened in error. If the blaze is to be re-applied, the correct procedure is to first re-extract the order(s) concerned and then re-fit the blaze.
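Of the blaze models mentioned above, the local-median fit is the easiest to illustrate. The following Python sketch assumes one flat-field order and one extracted object order sampled on the same pixels. The window size and function names are hypothetical, and the polynomial and spline options and the clipping step are not shown.

```python
from statistics import median


def running_median(values, half_window):
    """Median of each pixel's neighbourhood (window truncated at the order ends)."""
    n = len(values)
    return [median(values[max(0, i - half_window):min(n, i + half_window + 1)])
            for i in range(n)]


def fit_blaze(flat_order, half_window=5):
    """Local-median blaze model of one flat-field order, scaled to unit median."""
    smooth = running_median(flat_order, half_window)
    scale = median(smooth)
    return [s / scale for s in smooth]


def apply_blaze(spectrum, variance, blaze):
    """Divide an extracted order by the blaze; variances scale by blaze**2.

    Assumes the blaze is non-zero everywhere in the order.
    """
    flux = [f / b for f, b in zip(spectrum, blaze)]
    var = [v / (b * b) for v, b in zip(variance, blaze)]
    return flux, var
```

Normalising to unit median keeps the flattened order at roughly its original count level, so orders remain comparable with one another after division.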

Parameters:

Reduction File Objects:

- BLAZE_MEDIANS - type: _REAL, access: READ/WRITE.

- BLAZE_SPECT - type: _REAL, access: READ/WRITE.

- DEK_ABOVE - type: _INTEGER, access: READ.

- DEK_BELOW - type: _INTEGER, access: READ.

- EXTRACTED_OBJ - type: _REAL, access: READ/WRITE.

- EXTR_OBJ_VAR - type: _REAL, access: READ/WRITE.

- FITTED_WAVES - type: _DOUBLE, access: READ.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NREF_FRAME - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- TRC_OUT_DEV - type: _REAL, access: READ.

- TRC_POLY - type: _DOUBLE, access: READ.

12:ech_scrunch – Scrunch

This option is used to scrunch the extracted order spectra (and arc order spectra) into a (usually) linear

wavelength scale.

- 12.1 (ech_fitfwhm)

Fit reference line FWHM as a function of wavelength

- 12.2 (ech_wscale)

Calculate the wavelength scale

- 12.3 (ech_scrobj)

Scrunch the extracted object order

- 12.4 (ech_scrarc)

Scrunch the extracted arc orders

The scrunching of spectra into a linear wavelength scale provides the same facilities as the FIGARO SCRUNCH program, except that it works on an order-by-order basis.

echomop provides both global (bin size constant for all orders) and per-order scrunching options. The

global option would normally be used when it is necessary to co-add the extracted orders from

multiple data frames, and a standard bin-size is required.
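A flux-conserving re-bin of one order onto a linear wavelength grid can be sketched as below. The sketch assumes that each pixel's flux is spread uniformly between edges placed halfway between adjacent pixel-centre wavelengths, and that wavelength increases with pixel number. It illustrates the scrunching idea only; it does not reproduce echomop's or FIGARO's algorithm, and `scrunch` and `pixel_edges` are hypothetical names. For a global scale, the same `start` and `step` would simply be used for every order.

```python
import bisect


def pixel_edges(centres):
    """Bin edges halfway between adjacent pixel-centre wavelengths."""
    edges = [centres[0] - 0.5 * (centres[1] - centres[0])]
    edges += [0.5 * (a + b) for a, b in zip(centres, centres[1:])]
    edges.append(centres[-1] + 0.5 * (centres[-1] - centres[-2]))
    return edges


def scrunch(centres, flux, start, step, nbins):
    """Flux-conserving re-bin of one order onto a linear wavelength grid.

    centres: wavelength of each pixel centre (must increase with pixel).
    Output bin k covers [start + k*step, start + (k+1)*step).
    """
    edges = pixel_edges(centres)
    cum = [0.0]
    for f in flux:  # cumulative flux at each pixel edge
        cum.append(cum[-1] + f)

    def cum_at(w):
        # Linear interpolation of the cumulative flux, i.e. uniform flux within a pixel
        if w <= edges[0]:
            return 0.0
        if w >= edges[-1]:
            return cum[-1]
        i = bisect.bisect_right(edges, w) - 1
        frac = (w - edges[i]) / (edges[i + 1] - edges[i])
        return cum[i] + frac * (cum[i + 1] - cum[i])

    return [cum_at(start + (k + 1) * step) - cum_at(start + k * step)
            for k in range(nbins)]
```

Working with the cumulative flux guarantees that the total counts in an order are preserved when the new grid spans the whole order, whatever the new bin size.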

Scrunching results in both a 2-D array of scrunched individual orders, and a merged 1-D array of the

whole wavelength range. A utility (Option 21) is provided to assist in the co-adding of spectra from

many frames together. This option assumes that the first frame in the reduction has been scrunched

with the required wavelength scale. It then reads a list of additional reduction database names (or

EXTOBJ result file names) from an ASCII file called NAMES.LIS. The extracted spectra from each of

these reduction files are then scrunched to the same scale and co-added into the scrunched spectra in

the current reduction file. The type of weighting during addition is controlled using the parameter

TUNE_MRGWGHT.

Parameters:

Reduction File Objects:

- BLAZE_SPECT - type: _REAL, access: READ.

- ERR_SPECTRUM - type: _REAL, access: READ/WRITE.

- EXTRACTED_ARC - type: _REAL, access: READ.

- EXTRACTED_OBJ - type: _REAL, access: READ.

- EXTR_ARC_VAR - type: _REAL, access: READ.

- EXTR_OBJ_VAR - type: _REAL, access: READ.

- ID_COUNT - type: _INTEGER, access: READ.

- ID_LINES - type: _REAL, access: READ.

- ID_WAVES - type: _REAL, access: READ.

- ID_WIDTHS - type: _REAL, access: READ/WRITE.

- NO_OF_BINS - type: _INTEGER, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NREF_FRAME - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NX_REBIN - type: _INTEGER, access: READ/WRITE.

- REF_LINE_FWHM - type: _REAL, access: READ.

- SCRNCHD_ARC - type: _REAL, access: READ/WRITE.

- SCRNCHD_ARCV - type: _REAL, access: READ/WRITE.

- SCRNCHD_OBJ - type: _REAL, access: READ/WRITE.

- SCRNCHD_OBJV - type: _REAL, access: READ/WRITE.

- SCRNCHD_WAVES - type: _DOUBLE, access: READ/WRITE.

- WAVELENGTH - type: _DOUBLE, access: READ/WRITE.

- WID_POLY - type: _DOUBLE, access: READ/WRITE.

- W_POLY - type: _DOUBLE, access: READ.

- 1D_SPECTRUM - type: _REAL, access: READ/WRITE.

13:ech_ext2d – 2-D Distortion Correction

Detectors such as the IPCS often cause major geometric distortions in the images they produce. echomop provides a mechanism for modelling such distortion, and using that

model to provide corrections during the extraction process. It is also possible to generate a

‘corrected’ version of each order, for visual examination, or processing by other (single spectra)

software.

The distortion model uses a coordinate system based on X = calibrated wavelength at

trace, Y = pixel offset from trace, and is thus performed independently for each order in

turn.

The ARC frame is used to locate the positions of each identified arc line at a variety of offsets from the

trace centre. The difference between its wavelength (as identified) and that predicted by the

wavelength polynomial for its observed position is then calculated. These differences are modelled

using a Chebyshev polynomial.

Once a 2-D fit has been obtained, it is refined by either manual or automatic clipping of deviant points.

When done manually the positions of all the points being fitted (i.e., arc line centers) may be plotted in

a highly exaggerated form, in which systematic distortions of sub-pixel magnitude are readily

apparent.

As the wavelength scale produced by the distortion fitting leads inevitably to some re-binning when

the extraction takes place, it is normal to extract into a scrunched wavelength scale (e.g.,

constant bin size) and this is the default behavior of the 2-D extraction task/ECHMENU

Option 13.

This option is used to perform a full 2-D distortion-corrected extraction and is provided for

cases where the distortion of the frame is significant. The option consists of four steps as

follows:

- 13.1

Calculate wavelength scale.

- 13.2

Fit 2-D polynomials to the distortion.

- 13.3

Re-bin the order into a corrected 2-D form.

- 13.4

Extract from the re-binned form.

Distortion correction is done on a per-order basis, each order having its own distortions mapped independently. The distortion is modelled using a tie-point data set composed of the positions and wavelengths of all identified arc lines in the order. Thus, a wavelength calibration is a prerequisite to the distortion-corrected extraction operation. A 2-D Chebyshev polynomial is then fitted to the wavelength deviations of each arc-line pixel, relative to the wavelength at the trace/arc line intersection. The polynomial is used to generate delta-wavelength values at pairs of (X, Y-offset) coordinates, i.e.:

delta-wavelength = 2dPoly( X-pixel, Y-offset from trace )

for all X- and all Y-offsets within the dekker.
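The 2-D fit can be sketched as a linear least-squares problem in a tensor-product Chebyshev basis. The Python below is a minimal illustration, assuming the tie points (X, Y-offset, delta-wavelength) have already been measured from the arc frame. X and Y-offset are mapped onto [-1, 1] over user-supplied ranges. `DistortionModel` and its helpers are hypothetical names, not echomop routines, and no clipping of deviant points is attempted.

```python
def chebyshev_terms(n_terms, t):
    """T_0(t) ... T_{n_terms-1}(t) from the three-term recurrence."""
    vals = [1.0, t][:n_terms]
    while len(vals) < n_terms:
        vals.append(2.0 * t * vals[-1] - vals[-2])
    return vals


def to_unit(v, lo, hi):
    """Map v from [lo, hi] onto [-1, 1], the natural Chebyshev domain."""
    return (2.0 * v - (lo + hi)) / (hi - lo)


def solve_least_squares(rows, targets):
    """Solve the normal equations (A^T A) c = A^T t by Gauss-Jordan elimination."""
    n = len(rows[0])
    m = [[0.0] * (n + 1) for _ in range(n)]
    for row, t in zip(rows, targets):
        for i in range(n):
            for j in range(n):
                m[i][j] += row[i] * row[j]
            m[i][n] += row[i] * t
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[piv] = m[piv], m[c]
        for r in range(n):
            if r != c:
                f = m[r][c] / m[c][c]
                for k in range(c, n + 1):
                    m[r][k] -= f * m[c][k]
    return [m[i][n] / m[i][i] for i in range(n)]


class DistortionModel:
    """delta-wavelength = sum_ij c_ij T_i(x') T_j(y') for a single order."""

    def __init__(self, nx, ny, x_range, y_range):
        self.nx, self.ny = nx, ny  # number of terms along X and along Y-offset
        self.x_range, self.y_range = x_range, y_range
        self.coeffs = None

    def basis(self, x, yoff):
        tx = chebyshev_terms(self.nx, to_unit(x, *self.x_range))
        ty = chebyshev_terms(self.ny, to_unit(yoff, *self.y_range))
        return [a * b for a in tx for b in ty]

    def fit(self, xs, yoffs, deltas):
        """Least-squares fit to arc-line tie points."""
        rows = [self.basis(x, y) for x, y in zip(xs, yoffs)]
        self.coeffs = solve_least_squares(rows, deltas)
        return self

    def __call__(self, x, yoff):
        return sum(c * b for c, b in zip(self.coeffs, self.basis(x, yoff)))
```

Evaluating the fitted model at every (X, Y-offset) inside the dekker gives the map of wavelength deltas used to drive the 2-D scrunch.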

This map of wavelength delta values is then used to drive a 2-D scrunch of the order into a form

where each column (X=nn) corresponds to a consistent wavelength increment.

The final step is to extract the data from this re-binned form. The extraction algorithm used is identical

to the 1-D case from this point on.

Parameters:

Reduction File Objects:

- DEK_ABOVE - type: _INTEGER, access: READ.

- DEK_BELOW - type: _INTEGER, access: READ.

- FITTED_FLAT - type: _REAL, access: READ.

- FITTED_PFL - type: _DOUBLE, access: READ.

- FITTED_SSKY - type: _REAL, access: READ/WRITE.

- FLAT_ERRORS - type: _REAL, access: READ.

- FSSKY_ERRORS - type: _REAL, access: READ/WRITE.

- ID_COUNT - type: _INTEGER, access: READ.

- ID_LINES - type: _REAL, access: READ.

- ID_WAVES - type: _REAL, access: READ.

- MODEL_PROFILE - type: _REAL, access: READ/WRITE.

- NO_OF_BINS - type: _INTEGER, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NREF_FRAME - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NX_REBIN - type: _INTEGER, access: READ/WRITE.

- NY_PIXELS - type: _INTEGER, access: READ.

- OBJ_MASK - type: _INTEGER, access: READ.

- REBIN_ARC - type: _REAL, access: READ/WRITE.

- REBIN_EARC - type: _REAL, access: READ/WRITE.

- REBIN_EORDER - type: _REAL, access: READ/WRITE.

- REBIN_ORDER - type: _REAL, access: READ/WRITE.

- REBIN_QUALITY - type: _BYTE, access: READ/WRITE.

- REF_LINE_FWHM - type: _REAL, access: READ.

- SCRNCHD_ARC - type: _REAL, access: READ/WRITE.

- SCRNCHD_ARCV - type: _REAL, access: READ/WRITE.

- SCRNCHD_OBJ - type: _REAL, access: READ/WRITE.

- SCRNCHD_OBJV - type: _REAL, access: READ/WRITE.

- SCRNCHD_WAVES - type: _DOUBLE, access: READ.

- SKY_MASK - type: _INTEGER, access: READ.

- SSKY_SPECTRUM - type: _REAL, access: READ/WRITE.

- SSKY_VARIANCE - type: _REAL, access: READ/WRITE.

- TRC_POLY - type: _DOUBLE, access: READ.

- W_POLY - type: _DOUBLE, access: READ.

- W_POLY_2D - type: _DOUBLE, access: READ/WRITE.

14:ech_result – Write Results File

This option provides three output formats for data reduced within echomop.

The supported formats are NDF, ASCII, and DIPSO stack. Many other file formats can be accessed by

use of the Starlink utility CONVERT. Where applicable to the data format, errors will be included. For

example, DIPSO stacks cannot handle error data; NDFs can.

Object or arc spectra data may be output. Data for any of: extracted orders, scrunched orders, or

merged spectra may be used. A single order may be selected for output using the task

ech_single/ECHMENU Option 24; otherwise all-order data are output.

Parameters:

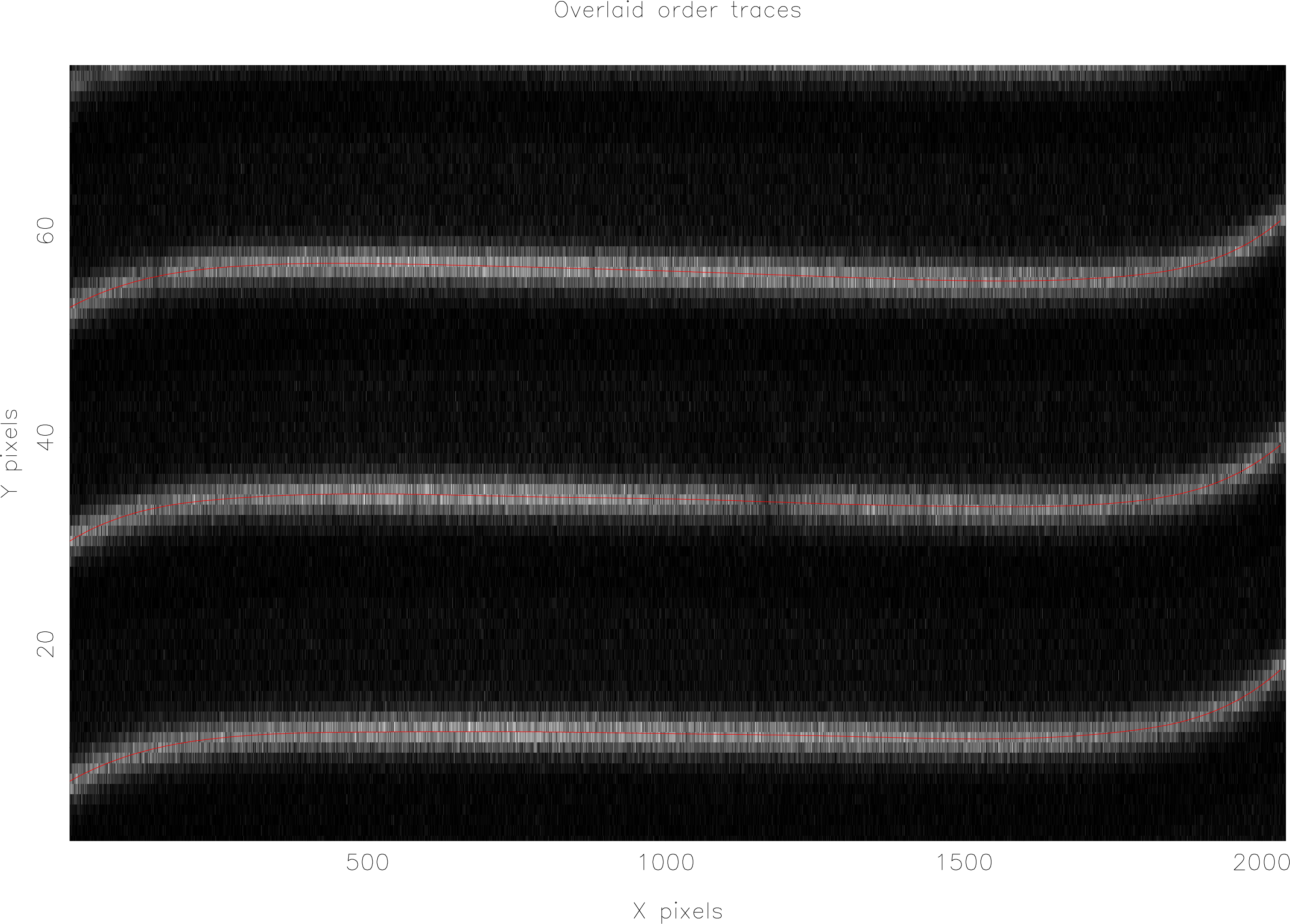

15:ech_trplt – Plot Order Traces

This option simply plots a graph with the same dimensions as the raw data frames. The graph shows

the paths of the traced order polynomials across the frame. The option should be used after

Option 2 or 3 to check that the traces are appropriate.

The Figure below shows an example of the order trace paths plot.

Parameters:

16:ech_trcsis – Check Trace Consistency

This option may be used to check the consistency of the order traces with each other. The task

predicts the path of each trace by fitting a function to the positions of the other orders at each

X-position.

The order whose trace deviates the most from the prediction is flagged as the ‘worst’ and the option to

update the path using the predictions is offered. This will allow the easy correction of

common tracing problems which can occur at the frame edges and with very faint or partial

orders.

In general it will only be effective when there are more than half a dozen orders in the frame. The

degree of variation and the consistency threshold may be set using the parameters TUNE_CNSDEV and

TUNE_TRCNS.
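The leave-one-out prediction described above can be sketched in Python as follows. The sketch assumes the function fitted across orders at each X-sample is a low-order polynomial in order index. The roles of TUNE_CNSDEV and TUNE_TRCNS are not modelled, and the function names are hypothetical.

```python
def polyfit(xs, ys, deg):
    """Least-squares polynomial coefficients, lowest power first (Gauss-Jordan)."""
    n = deg + 1
    m = [[0.0] * (n + 1) for _ in range(n)]
    for x, y in zip(xs, ys):
        p = [x ** k for k in range(n)]
        for i in range(n):
            for j in range(n):
                m[i][j] += p[i] * p[j]
            m[i][n] += p[i] * y
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[piv] = m[piv], m[c]
        for r in range(n):
            if r != c:
                f = m[r][c] / m[c][c]
                for k in range(c, n + 1):
                    m[r][k] -= f * m[c][k]
    return [m[i][n] / m[i][i] for i in range(n)]


def polyval(coeffs, x):
    return sum(c * x ** k for k, c in enumerate(coeffs))


def trace_consistency(traces, deg=2):
    """Predict each order's path from the other orders and measure deviations.

    traces[k][j] is the Y position of order k at X-sample j. Returns
    (index of worst order, mean |deviation| per order, predicted paths).
    """
    n_orders, n_x = len(traces), len(traces[0])
    predicted = [[0.0] * n_x for _ in range(n_orders)]
    for j in range(n_x):
        for k in range(n_orders):
            others = [m for m in range(n_orders) if m != k]
            coeffs = polyfit([float(m) for m in others],
                             [traces[m][j] for m in others], deg)
            predicted[k][j] = polyval(coeffs, float(k))
    dev = [sum(abs(t - p) for t, p in zip(traces[k], predicted[k])) / n_x
           for k in range(n_orders)]
    worst = max(range(n_orders), key=dev.__getitem__)
    return worst, dev, predicted
```

Replacing the worst order's path by `predicted[worst]` corresponds to accepting the offered update. With only a few orders the leave-one-out fits are poorly constrained, which is consistent with the advice that the check works best with more than half a dozen orders.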

Parameters:

Reduction File Objects:

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- TRC_POLY - type: _DOUBLE, access: READ/WRITE.

17:ech_decos2 – Post-trace Cosmic-Ray Locate

This utility option should be run immediately after ech_spatial/ECHMENU Option 4 has been used

to define the dekker and object limits.

It uses information about the order paths and the spatial profile in order to do a more

effective cosmic-ray location. The sky and object pixels are processed separately in two

passes.

Each order in turn is processed by evaluating the degree to which its pixels exceed their expected

intensities (based upon profile and total intensity in the increment).

A cumulative distribution function is then constructed and clipped at a pre-determined sigma level.

Clipping and re-fitting continues until a Kolmogorov-Smirnov test indicates convergence or until the

number of clipped points falls to one per iteration. Located cosmic-ray pixels are flagged in the

quality array. Both this and the pre-trace-locator are automatically followed by a routine to do sky-line

checking. This routine can restore any pixels it judges to be possible sky line pixels (rather than

cosmic-ray hits). If there are many frames of the same object available then it is possible to use

coincidence checking to enhance cosmic-ray detection. The script decos_many takes a list of input frames, performs this checking, and flags the cosmic-ray pixels in each frame. To use it, type:

% $ECHOMOP_EXEC/decos_many
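The clip-and-test loop described above might look roughly like the following Python sketch for a single increment. It assumes that the expected intensity of each pixel is its profile value times a total intensity re-estimated from unflagged pixels, and that variances are known. Clipping is one-sided at `nsigma`, and the Kolmogorov-Smirnov statistic of the remaining normalised residuals against a unit Gaussian is compared with its asymptotic 5% critical value (about 1.36 / sqrt(n)). The thresholds, the per-increment scope and the function names are illustrative assumptions. This is not echomop's implementation, and the sky-line restoration step is omitted.

```python
import math


def ks_statistic_normal(values):
    """One-sample Kolmogorov-Smirnov D statistic against a standard normal."""
    xs = sorted(values)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs):
        cdf = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
        d = max(d, (i + 1) / n - cdf, cdf - i / n)
    return d


def locate_hits(data, profile, variance, nsigma=5.0, max_iter=20):
    """Flag pixels that exceed profile * total by more than nsigma sigma."""
    good = [True] * len(data)
    for _ in range(max_iter):
        idx = [i for i, g in enumerate(good) if g]
        if not idx:
            break
        # Re-estimate the total intensity from pixels not yet flagged
        num = sum(profile[i] * data[i] / variance[i] for i in idx)
        den = sum(profile[i] ** 2 / variance[i] for i in idx)
        total = num / den
        resid = [(data[i] - profile[i] * total) / math.sqrt(variance[i])
                 for i in range(len(data))]
        new_hits = [i for i in idx if resid[i] > nsigma]
        for i in new_hits:
            good[i] = False
        if len(new_hits) <= 1:
            break  # clipping has fallen to at most one pixel per iteration
        remaining = [resid[i] for i, g in enumerate(good) if g]
        if ks_statistic_normal(remaining) < 1.36 / math.sqrt(len(remaining)):
            break  # remaining residuals are consistent with pure noise
    return [i for i, g in enumerate(good) if not g]
```

Only positive excursions are clipped, since cosmic-ray hits add charge; a real implementation would pool residuals over the whole order before building the distribution.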

Parameters:

Reduction File Objects:

- DEK_ABOVE - type: _INTEGER, access: READ.

- DEK_BELOW - type: _INTEGER, access: READ.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- OBJ_MASK - type: _INTEGER, access: READ.

- SKY_MASK - type: _INTEGER, access: READ.

- SKY_SPECTRUM - type: _REAL, access: READ/WRITE.

- TRC_POLY - type: _DOUBLE, access: READ.

18:ech_decimg – Image Cosmic-Ray Pixels

This option uses the quality array to determine which pixels have been flagged as contaminated by

cosmic-ray hits. It then takes the original object frame and makes a copy in which all the hit pixels are

replaced by zero values. This frame should then be blinked with the original to visually assess the

success of the cosmic-ray location process.

Parameters:

19:ech_qextr – Quick-look Extraction

This option allows quick extraction of an order (or all orders) once ech_spatial/ECHMENU

Option 4 has been completed (object- and sky-pixel selection). The extraction method used is a simple sum of pixels in the dekker, and the sky subtraction is done by calculating the average value over all sky pixels in the increment. No flat-field balance factors are used. This option should only be used to get a quick look at the data; the spectra produced should not be used for further analysis, as much better results will be obtained by using Option 8 for a full extraction.
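The quick-look arithmetic is simple enough to sketch directly. The Python below assumes the order has already been cut into increments along its trace, with boolean object and sky masks across the order. Only object pixels are summed here, which is one reading of "sum of pixels in the dekker". The function names are hypothetical.

```python
def quick_look_increment(column, obj_mask, sky_mask):
    """Sky-subtracted sum for one increment across an order.

    column: pixel values across the order at one X position.
    obj_mask / sky_mask: one boolean per pixel.
    """
    sky = [v for v, s in zip(column, sky_mask) if s]
    sky_level = sum(sky) / len(sky) if sky else 0.0  # mean sky in this increment
    return sum(v - sky_level for v, o in zip(column, obj_mask) if o)


def quick_look_order(increments, obj_mask, sky_mask):
    """Apply the quick-look sum to every increment of one order."""
    return [quick_look_increment(col, obj_mask, sky_mask) for col in increments]
```

No profile weighting, flat-field correction or variance estimate is involved, which is why the result is suitable only as a quick check.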

Parameters:

Reduction File Objects:

- DEK_ABOVE - type: _INTEGER, access: READ.

- DEK_BELOW - type: _INTEGER, access: READ.

- EXTRACTED_ARC - type: _REAL, access: READ/WRITE.

- EXTRACTED_OBJ - type: _REAL, access: READ/WRITE.

- EXTR_ARC_VAR - type: _REAL, access: READ/WRITE.

- EXTR_OBJ_VAR - type: _REAL, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- OBJ_MASK - type: _INTEGER, access: READ.

- SKY_MASK - type: _INTEGER, access: READ.

- TRC_POLY - type: _DOUBLE, access: READ.

20:ech_wvcsis – Check Wavelength Scales

This option performs a function analogous to that performed by Option 16 (order traces), but operates upon the wavelength fits.

It is thus used after Option 10 has been used to calculate the wavelength scales.

The wavelength consistency check is confined to those areas beyond the range within which lines

have been identified. It therefore only corrects the very ends of the orders' wavelength scales.

These are the regions where problems are most likely to occur, as the polynomial fits can become

unstable when a high number of coefficients has been used, and there are no fitted points for a

substantial fraction of the order (e.g., first 20%).

Parameters:

- AUTO_ID - YES for fully automatic identification.

- ECH_FTRDB - Reference line list database.

- TUNE_DIAGNOSE - YES to log activity to debugging file.

- TUNE_MAXPOLY - Maximum coefficients for fits.

- TUNE_MAXRFLN - Maximum number of reference lines.

- TUNE_MXSMP - Maximum number of X-samples to trace.

- W_NPOLY - Number of coeffs of wavelength fitting function.

Reduction File Objects:

- EFTRDB_WAVELENGT - type: _REAL, access: READ.

- ID_COUNT - type: _INTEGER, access: READ/WRITE.

- ID_LINES - type: _REAL, access: READ/WRITE.

- ID_STATUS - type: _INTEGER, access: READ/WRITE.

- ID_WAVES - type: _REAL, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- OBS_INTEN - type: _REAL, access: READ.

- OBS_LINES - type: _REAL, access: READ.

- ORDER_IDNUM - type: _INTEGER, access: READ.

- WSEAR_END - type: _REAL, access: READ.

- WSEAR_START - type: _REAL, access: READ.

- W_POLY - type: _DOUBLE, access: READ/WRITE.

21:ech_mulmrg – Merge Multiple Spectra

This utility option is provided to assist in co-adding spectra from many frames together. This option

assumes that the first frame in the reduction has been scrunched with the required wavelength

scale.

It then reads a list of additional reduction database names from an ASCII file called NAMES.LIS. The

extracted spectra from each of these reduction files are then scrunched to the same scale and co-added

into the scrunched spectra in the current reduction file.

The parameter TUNE_MRGWGHT controls the type of weighting used during addition.
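The text does not list the weighting schemes offered by TUNE_MRGWGHT. As one plausible example, the Python sketch below co-adds spectra that are already on a common scrunched grid, using inverse-variance weights. The function name and the choice of weighting are assumptions.

```python
def coadd(spectra, variances):
    """Inverse-variance weighted mean of spectra on a common wavelength grid.

    Bins with non-positive variance in a given frame are ignored for that frame.
    Returns (co-added flux, co-added variance).
    """
    n = len(spectra[0])
    out, out_var = [], []
    for i in range(n):
        wsum = 0.0
        fsum = 0.0
        for f, v in zip(spectra, variances):
            if v[i] > 0:
                w = 1.0 / v[i]
                wsum += w
                fsum += w * f[i]
        if wsum > 0:
            out.append(fsum / wsum)
            out_var.append(1.0 / wsum)
        else:
            out.append(0.0)  # no valid data in this bin
            out_var.append(0.0)
    return out, out_var
```

Inverse-variance weighting minimises the variance of the combined spectrum when the inputs share the same flux scale; frames of differing exposure would first need scaling to a common level.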

Parameters:

Reduction File Objects:

- ERR_SPECTRUM - type: _REAL, access: READ/WRITE.

- EXTRACTED_OBJ - type: _REAL, access: READ.

- EXTR_OBJ_VAR - type: _REAL, access: READ.

- NO_OF_BINS - type: _INTEGER, access: READ.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NREF_FRAME - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NX_REBIN - type: _INTEGER, access: READ.

- SCRNCHD_OBJ - type: _REAL, access: READ/WRITE.

- SCRNCHD_OBJV - type: _REAL, access: READ/WRITE.

- SCRNCHD_WAVES - type: _DOUBLE, access: READ/WRITE.

- WAVELENGTH - type: _DOUBLE, access: READ/WRITE.

- W_POLY - type: _DOUBLE, access: READ.

- 1D_SPECTRUM - type: _REAL, access: READ/WRITE.

22:ech_mdlbck – Model Scattered Light

This option is used in place of Option 6 (Model sky) in cases where there is severe scattered

light contamination. It works by fitting independent polynomials/splines to each image

column (actually only the inter-order pixels). Once the column fits have been done the

results are used as input to a second round of fits which proceeds parallel to the order

traces.

The final fitted values are saved in the sky model arrays.

This process is very CPU intensive and should not be used unless it is needed.
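The first, column-by-column pass can be sketched in Python as below. The sketch assumes the inter-order rows of each column are already known (for example from the trace and dekker limits) and uses a low-order polynomial for each column. The second round of fits parallel to the traces, and any splines or clipping, are omitted. The function names are hypothetical.

```python
def polyfit(xs, ys, deg):
    """Least-squares polynomial coefficients, lowest power first (Gauss-Jordan)."""
    n = deg + 1
    m = [[0.0] * (n + 1) for _ in range(n)]
    for x, y in zip(xs, ys):
        p = [x ** k for k in range(n)]
        for i in range(n):
            for j in range(n):
                m[i][j] += p[i] * p[j]
            m[i][n] += p[i] * y
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[piv] = m[piv], m[c]
        for r in range(n):
            if r != c:
                f = m[r][c] / m[c][c]
                for k in range(c, n + 1):
                    m[r][k] -= f * m[c][k]
    return [m[i][n] / m[i][i] for i in range(n)]


def polyval(coeffs, x):
    return sum(c * x ** k for k, c in enumerate(coeffs))


def model_scattered_light(frame, inter_order_rows, deg=2):
    """First-pass background model: one polynomial per image column.

    frame[row][col] holds the image; inter_order_rows[col] lists the rows of
    that column lying between orders. Returns a model with the frame's shape.
    """
    n_rows, n_cols = len(frame), len(frame[0])
    model = [[0.0] * n_cols for _ in range(n_rows)]
    for c in range(n_cols):
        rows = inter_order_rows[c]
        coeffs = polyfit([float(r) for r in rows], [frame[r][c] for r in rows], deg)
        for r in range(n_rows):  # interpolate the background under the orders
            model[r][c] = polyval(coeffs, float(r))
    return model
```

Even this single pass performs one fit per image column, which gives a feel for why the full two-pass procedure is expensive on large frames.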

Parameters:

Reduction File Objects:

- DEK_ABOVE - type: _INTEGER, access: READ.

- DEK_BELOW - type: _INTEGER, access: READ.

- FITTED_FLAT - type: _REAL, access: READ.

- FITTED_SKY - type: _REAL, access: READ/WRITE.

- FLAT_ERRORS - type: _REAL, access: READ.

- FSKY_ERRORS - type: _REAL, access: READ/WRITE.

- NO_OF_ORDERS - type: _INTEGER, access: READ.

- NX_PIXELS - type: _INTEGER, access: READ.

- NY_PIXELS - type: _INTEGER, access: READ.

- SKY_MASK - type: _INTEGER, access: READ.

- SKY_SPECTRUM - type: _REAL, access: READ/WRITE.

- SKY_VARIANCE - type: _REAL, access: READ/WRITE.

- TRC_POLY - type: _DOUBLE, access: READ.

23:ech_tuner – Adjust Tuning Parameters

This option simply provides a centralised mechanism for viewing and editing the values of all the

tuning parameters known to the system.

Most of the time it is more convenient to use the -option syntax from the main menu, as this only lists

parameters used by the current default option.

In Option 23, any parameters used by the current default option are flagged with an asterisk.

If, when used, a tuning parameter has a non-default value, an informational message is

displayed.

24:ech_single – Set Single-order Processing

Allows the selection of a single order for all tasks which operate on an order-by-order basis. For example, to re-fit the order trace for order 3 while leaving all other orders unchanged, first use this option to change the selected order to number 3, and then invoke the ech_fitord task/ECHMENU Option 3.

Note that options which operate on all orders at once (e.g., trace consistency checking) still operate correctly when a single order is selected, as they ignore the selection and use all the orders anyway.

When using the individual tasks, the strategy for selecting single-order or all-order operation is different. Individual function tasks which can operate on single orders all use the parameter IDX_NUM_ORDERS, which you should set to the number of the order to process, or to zero to indicate that all orders are to be processed in turn, e.g.:

% ech_trace idx_num_orders=4

would just trace order number 4.
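The IDX_NUM_ORDERS convention (zero for all orders, otherwise a single order number) can be summarised by a small Python helper. This is only an illustration of the selection rule; the function name orders_to_process is hypothetical, and the range check against the number of orders (cf. the NO_OF_ORDERS reduction file object) is an assumption about how an out-of-range value would be treated.

```python
def orders_to_process(idx_num_orders, no_of_orders):
    """Return the 1-based order numbers a per-order task would loop over.

    Hypothetical helper: 0 selects every order in turn, a positive value
    selects just that order (mirroring the IDX_NUM_ORDERS convention).
    """
    if idx_num_orders == 0:
        return list(range(1, no_of_orders + 1))
    if 1 <= idx_num_orders <= no_of_orders:
        return [idx_num_orders]
    # Assumed behaviour: reject order numbers that do not exist.
    raise ValueError(f"IDX_NUM_ORDERS={idx_num_orders} is outside 0..{no_of_orders}")


# Equivalent of "ech_trace idx_num_orders=4" on a 10-order frame:
for order in orders_to_process(4, 10):
    print(f"tracing order {order}")
```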

25:ech_all – Set All-order Processing

Selects automatic looping through all available orders for all tasks which are performed on an order-by-order basis. This is the default method of operation.

26:ech_disable – Disable an Order

This option disables an order from any further processing by removing its order trace. To re-enable an order, re-trace it using Option 24 (select single order) followed by Option 2 (trace an order).

Parameters:

27:ech_plot – Run Plot Utility

Many of the temporary results arrays stored in the reduction database can be of assistance when

tracking down problems during a reduction. All of these can be graphically examined using the

ech_plot task/ECHMENU Option 27.

This utility prompts for object names for the Y-axis (and, optionally, the X-axis, separated by a comma). If a null object name is given, that axis is generated automatically using monotonically increasing integer values.

Normally you will supply only the name of the Y-axis object and let the X-axis be auto-generated. An exception is when plotting wavelength objects along the X-axis.

Unless you are interested in the first order of a multi-order array, you must supply the array indices from which to start plotting.

For example, OBJ denotes the first order's extracted object spectrum, OBJ[1,4] denotes the fourth order's extracted object spectrum, and ARC[100,13,2] denotes the region of the second arc frame's thirteenth order starting at X-sample 100.

In this last case, unless the N(umber) of samples to plot has been reduced so that the plotted range ends within the thirteenth order (i.e., to no more than the array X-dimension minus 99 samples), some samples from the fourteenth order will also be plotted.
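The spill-over into the fourteenth order follows from how the indices map onto storage. Assuming, as these examples imply, that the first index varies fastest (Fortran-style ordering), the Python sketch below lists which elements a plot of N samples starting at ARC[100,13,2] would cover. The array dimensions (1024 X-samples, 20 orders, 2 arc frames) and the function names are hypothetical.

```python
from math import prod


def fortran_offset(index, dims):
    """0-based linear offset of a 1-based index whose first subscript varies fastest."""
    if len(index) != len(dims):
        raise ValueError("index and dims must have the same rank")
    offset, stride = 0, 1
    for i, n in zip(index, dims):
        if not 1 <= i <= n:
            raise IndexError(f"subscript {i} outside 1..{n}")
        offset += (i - 1) * stride
        stride *= n
    return offset


def fortran_index(offset, dims):
    """Inverse of fortran_offset: convert a linear offset back to 1-based subscripts."""
    subscripts = []
    for n in dims:
        subscripts.append(offset % n + 1)
        offset //= n
    return tuple(subscripts)


def samples_plotted(start, dims, n_samples):
    """Subscripts of the n_samples consecutive elements plotted from `start`."""
    first = fortran_offset(start, dims)
    last = min(first + n_samples, prod(dims))  # plotting cannot run past the array end
    return [fortran_index(k, dims) for k in range(first, last)]


dims = (1024, 20, 2)  # hypothetical: X-samples, orders, arc frames
full = samples_plotted((100, 13, 2), dims, n_samples=1024)
# full[0] is (100, 13, 2); full[-1] is (99, 14, 2): 99 samples come from order 14
short = samples_plotted((100, 13, 2), dims, n_samples=1024 - 99)
# short[-1] is (1024, 13, 2): the plot now stops at the end of order 13
```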

The following facilities are also provided. Most are selected by typing the single character shown, followed by carriage-return.

- B(rowse) Used in conjunction with an image display. The object or arc data frame should be specified, otherwise it will be prompted for (e.g., B MYOBJ).

echomop displays the image and puts a cursor on the display. You can then position the cursor and type a key indicating which type of data you wish to select for plotting.

Options include:

- O — for object order

- A — for arc order

- S — for scrunched object order

- F — for flat-field model balance factors

- S — for sky model

All arrays are plotted using the cursor position to determine which order, subsample, etc. is required. By default the full X-axis dimension is used, unless the N command has been used to set the number of samples to plot explicitly.

For example, setting N to 20 and imaging the arc frame allows the extracted profile of an arc line to be plotted simply by positioning the cursor on the line in the image and hitting A; this plots a 20-sample section of the extracted arc from the requisite order.

- D(irectory) / FD (full directory)

Lists a directory of reduction database objects. Not all of these are arrays, so not all can be plotted. The most common objects are listed by D(ir), and these are all arrays.

The names make it easy to recognise which arrays contain the information required. For example, the object FFLT contains the fitted flat-field balance factors for each order and trace offset.

Specifying an object name without any dimensional specifications plots the first N elements starting from the beginning of the array, i.e., ARRAY[1,1,1,...] to ARRAY[N,1,1,...].

- E(xit)

Returns to the main ECHMENU menu, or exits from the ech_plot single-activity task.

- G(raphics/Grayscale)

Toggles the plotting mode. Grayscale mode is useful for plotting swathes of 2-D and 3-D objects, e.g.:

- in Graphics mode, FSKY[1,1,1] plots the first spatial increment of the sky model for order 1.

- in Grayscale mode, FSKY[1,1,1] images the entire sky model for order 1. Thus, in most cases, the first two indices will be equal to 1 when using grayscale plotting.

- H(elp)

Accesses the HELP Facility.

- I(nteractive cursor)

Enables the interactive cursor for the next graph to be plotted: the cursor may be moved around the graph, and the exact data values plotted can be examined by pressing the space bar.

- N(umber of samples)

Sets the number of data samples plotted from the arrays. Unless a start position is given in the object name, plotting always commences from the beginning of the array, and N is set to the number of elements in the array. Setting a smaller value effectively allows you to zoom in on a small subsection of any array.

- L(imit setting)

Allows the X- and Y-limits of the plot to be set. The limits are normally calculated automatically from the data in the array. To resume auto-scaling, set all the X and Y limits back to 0.

- R(ebin factor)

Sets the degree of rebinning to be performed on the data before plotting. It remains active until reset to 1 (no rebinning). Specify a positive factor for simple summed bins, or a negative factor to request full smoothing.

- S(tyle)

Allows the specification of various style parameters for the plots: the line styles and colours may be specified, as may the plot style. The following keywords are recognised and may be appended to the S to save time; for example, S RED sets the plot colour to red.

Keywords:

- Colours RED,WHITE,BLACK,BLUE,GREEN,YELLOW,CYAN,MAGENTA

- Line types DOTD,DASH,LINE

- Plot types POINTS,LINES,*,+,x,BINS

- Text fonts ROMAN,ITALIC

- Misc PROMPT,NOPROMPT

- U(ser-defined window)

Toggles user-windowing. When this is active, each subsequent plot prompts the user to select two limits on the display surface, and a box based on these limits is then used for the plot.

This allows complete freedom in producing a set of plots: plots may be partially overlaid, stacked, etc. Once you have produced the display required, the easiest way to get a hardcopy of it is to use the built-in facilities on your workstation/X-terminal to grab it from the screen (this normally results in a PostScript file).

- W(indowing)

Permits the division of the plotting surface into panes into which subsequent graphs are plotted. Two factors are requested: the subdivision factors in the horizontal and vertical directions. For example, 3 and 2 divide the surface into two rows of three graphs. Setting both factors to 1 restores normal single-graph behaviour.

- + (Overplot)

May be used on its own or as a prefix to any object name. Causes the next graph to be plotted over the previous one without re-drawing the axes etc.

- ? (Help)

The same as H(elp).

- Name of object(s) to plot

A single object name indicates that the object array is to be plotted along the Y-axis against a default linear scale on the X-axis.

Two object names separated by a comma indicate that the two arrays are to be plotted against each other (the Y-axis object first, then the X-axis object).
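The object-name syntax used by ech_plot (an optional + overplot prefix, a Y-axis object, an optional X-axis object after a comma, and optional [..] start indices which themselves contain commas) can be parsed as in the Python sketch below. It is an illustration only, not the ECHOMOP parser: the function names are hypothetical, WAVE is a made-up X-axis object name, and treating names case-insensitively is an assumption.

```python
import re

# An object name followed by optional [i,j,...] start indices.
_OBJ = re.compile(r"^\s*([A-Za-z_][A-Za-z0-9_]*)\s*(?:\[([0-9,\s]*)\])?\s*$")


def _split_top_level(text):
    """Split on commas that are not inside [...] index brackets."""
    parts, current, depth = [], [], 0
    for ch in text:
        if ch == "[":
            depth += 1
        elif ch == "]":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append("".join(current))
            current = []
        else:
            current.append(ch)
    parts.append("".join(current))
    return parts


def _parse_object(spec):
    """Return (NAME, indices); an empty index tuple means 'start of the array'."""
    m = _OBJ.match(spec)
    if not m:
        raise ValueError(f"cannot parse object specification: {spec!r}")
    name, idx = m.group(1), m.group(2)
    indices = tuple(int(v) for v in idx.split(",")) if idx else ()
    return name.upper(), indices


def parse_plot_request(text):
    """Parse '[+]Y[,X]'; a null name (None) means that axis is auto-generated."""
    text = text.strip()
    overplot = text.startswith("+")
    if overplot:
        text = text[1:]
    parts = _split_top_level(text)
    if len(parts) > 2:
        raise ValueError("at most two object names (Y,X) may be given")
    y_spec = parts[0]
    x_spec = parts[1] if len(parts) == 2 else ""
    y = _parse_object(y_spec) if y_spec.strip() else None
    x = _parse_object(x_spec) if x_spec.strip() else None
    return {"overplot": overplot, "y": y, "x": x}


print(parse_plot_request("+ARC[100,13,2]"))
```

Splitting only on top-level commas is what lets OBJ[1,4] be read as one object with two indices rather than as a Y,X pair.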
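The R(ebin factor) facility of ech_plot distinguishes positive factors (simple summed bins) from negative ones ("full smoothing"). The Python/NumPy sketch below shows one way to realise that distinction. The manual does not define full smoothing, so a boxcar (moving-average) filter of width |factor| is assumed, as is dropping a trailing partial bin when summing; the function name rebin_for_plot is hypothetical.

```python
import numpy as np


def rebin_for_plot(y, factor):
    """Rebin data before plotting (a sketch of the R(ebin factor) behaviour).

    factor > 1  : sum each run of `factor` adjacent samples (trailing partial bin dropped)
    factor == 1 : no rebinning
    factor < 0  : assumed boxcar smoothing of width |factor|; output has the same
                  length as the input (for |factor| <= len(y)), edges zero-padded
    """
    y = np.asarray(y, dtype=float)
    if factor == 0:
        raise ValueError("a rebin factor of 0 is not meaningful")
    if factor == 1:
        return y.copy()
    if factor > 1:
        n_full = (len(y) // factor) * factor
        return y[:n_full].reshape(-1, factor).sum(axis=1)
    width = -factor
    kernel = np.ones(width) / width
    return np.convolve(y, kernel, mode="same")


summed = rebin_for_plot([1, 2, 3, 4, 5, 6, 7], 2)  # three summed bins; the 7 is dropped
smoothed = rebin_for_plot([0, 0, 3, 0, 0], -3)     # a spike spread over three samples
```

Summing reduces the number of plotted points (useful for faint data), whereas smoothing keeps every sample position and only suppresses pixel-to-pixel noise.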

28:ech_menu – Display Full Menu

This option selects the display of the full menu of options available in the ECHMENU top-level menu. By default only the utility options and the currently most likely selections appear on the menu.

29:ech_system – Do System Command

This option allows the execution of one or more system level commands without leaving and

re-starting the ECHMENU shell task.

The command is prompted for with:

You should then enter a command. If more than one command is required, enter csh to start a fully independent shell process.

This process must be terminated by a CTRL-D in order to return to the ECHMENU shell task. This option can be used to perform system commands like ls, and also FIGARO commands such as IMAGE. However, echomop commands which change parameter values may not operate correctly, because the monolith already has the echomop parameter file open.

System commands can also be entered directly at the Option: prompt by making the first character a $.

Thus:

would execute the system ‘directory’ command, and return to the main menu.

30:ech_genflat – Output Flattened-field

This option was introduced in echomop version 3.2-0. It writes the flat-field balance factors produced by ech_ffield to an image, which can then be inspected, for example using KAPPA DISPLAY.

Parameters:

31:ech_exit – Exit ECHMENU

This option exits the ECHMENU shell task. It can also be selected by typing EXIT, QUIT, a single E or Q, or 99, followed by carriage-return.
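The shortcuts accepted at the ECHMENU Option: prompt (a leading $ for a system command, as described under Option 29, and the exit words listed above) can be summarised by a small classifier. This Python sketch is hypothetical and not ECHMENU's own input handling; in particular, case-insensitive matching is an assumption.

```python
# Exit shortcuts listed for Option 31; "31" itself is handled as an ordinary option.
EXIT_WORDS = {"EXIT", "QUIT", "E", "Q", "99"}


def classify_menu_input(text):
    """Classify one line typed at the Option: prompt (hypothetical helper)."""
    stripped = text.strip()
    if stripped.startswith("$"):
        # Everything after the $ is passed to the operating system.
        return ("system", stripped[1:].strip())
    if stripped.upper() in EXIT_WORDS:  # assumed to be case-insensitive
        return ("exit", None)
    if stripped.isdigit():
        return ("option", int(stripped))
    return ("other", stripped)


for line in ["$ ls -l", "quit", "27"]:
    print(line, "->", classify_menu_input(line))
```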

Copyright © 2003 Council for the Central Laboratory of the Research Councils